The Instant Smear Campaign Against Border Patrol Shooting Victim Alex Pretti

You’ve likely at least heard of Marion Stokes, the woman who constantly recorded television for over 30 years. She comes up on Reddit and other places every so often as a hero archivist who fought against disinformation and disappearing history. But who was Marion Stokes, and why did she undertake this project? And more importantly, what happened to all of those tapes? Let’s take a look.

Marion was born November 25, 1929, in Germantown, Philadelphia, Pennsylvania. Noted for her left-wing beliefs as a young woman, she became quite politically active, and was even courted by the Communist Party USA to potentially become a leader. Marion was also involved in the civil rights movement.

For nearly 20 years, Marion worked as a librarian at the Free Library of Philadelphia until she was fired in the 1960s, likely as a direct result of her political activity. She married Melvin Metelits, a teacher and member of the Communist Party, with whom she had a son, Michael.

Throughout this time, Marion was spied on by the FBI, to the point that she and her husband attempted to defect to Cuba. They were unsuccessful in securing Cuban visas, and separated in the mid-1960s when Michael was four.

Marion began co-producing a Sunday morning public-access talk show in Philadelphia called Input with her future husband, John Stokes, Jr. The show focused on social justice, and its aim was to bring different types of people together to discuss issues peaceably.

Marion’s taping began in 1979 with the Iranian Hostage Crisis, which coincided with the dawn of the twenty-four-hour news cycle. Her final tape is from December 14, 2012 — she recorded coverage of the Sandy Hook massacre as she passed away.

In more than three decades of taping, Marion amassed 70,000 VHS and Betamax tapes. She mostly taped various news outlets, fearing that the information would disappear forever. Her time in the television industry taught her that networks typically considered preservation too expensive, and therefore often reused tapes.

But Marion didn’t just tape the news. She also taped programs such as The Cosby Show, Divorce Court, Nightline, Star Trek, The Oprah Winfrey Show, and The Today Show. Part of her collection is round-the-clock coverage of news networks, recorded on as many as eight VCRs: three to five ran all day, every day, and up to eight would be taping when something special was happening. All family outings were planned around the six-hour VHS tape, and Marion would sometimes cut dinner short to go home and change the tapes.

People can’t take knowledge from you. — Marion Stokes

You might be wondering where she kept all the tapes, or how she could afford to do this, both financially and time-wise. For one thing, her second husband John Stokes, Jr. was already well off. For another, she was an early investor in Apple stock, using capital from her in-laws. To say she bought a lot of Macs is an understatement. According to the excellent documentary Recorder, Marion owned multiples of every Apple product ever produced. Marion was a huge fan of technology and viewed it as a way of unlocking people’s potential. By the end of her life, she had nine apartments filled with books, newspapers, furniture, and multiples of any item she ever became obsessed with.

In addition to creating this vast video archive, Marion took half a dozen daily newspapers and over 100 monthly periodicals, which she collected for 50 years. That’s not to mention the 40,000 to 50,000 books in her possession. In one interview, Marion’s first husband Melvin Metelits said that in the mid-1970s, the family would go to a bookstore and drop $800 on new books. That’s nearly $5,000 in today’s money.

It’s easy to understand why she started with VHS tapes: it was the late 1970s, and they were the best option available. When TiVo came along, Marion was not impressed, preferring not to expose her recording habits to any government that might be watching. Given her past, she had every reason to be afraid.

Those in power are able to write their own history. — Marion Stokes

As for the why, there were several reasons. For one, it was a form of activism, and activism defined much of Marion’s life. The rest of it, I would argue, was defined by the archive she amassed.

Marion started taping when the Iranian Hostage Crisis began. Shortly thereafter, the 24/7 news cycle was born, and networks reached into small towns in order to fill space. And that’s what she was concerned with — the effect that filling space would have on the average viewer.

Marion was obsessed with the way that media reflects society back upon itself. With regard to the hostage crisis, her goal was to reveal a set of agendas on the part of governments. Her first husband Melvin Metelits said that Marion was extremely fearful that America would replicate Nazi Germany.

The show Nightline was born from nightly coverage of the crisis. It aired at 11:30PM, which meant it had to compete with the late-night talk show hosts. And it did just fine, rising on the wings of the evening soap opera it was creating.

When Marion passed on December 14, 2012, news of the Sandy Hook massacre began to unfold. It was only after she took her last breath that her VCRs were switched off. Marion bequeathed the archive to her son Michael, who spent a year and a half dealing with her things. He gave her books to a charity that teaches at-risk youth using secondhand materials, and he says he got rid of all the remaining Apples.

But no one would take the tapes. That is, until the Internet Archive heard about them. The tapes were hauled from Philadelphia to San Francisco, packed in banker’s boxes and stacked in four shipping containers.

So that’s 70,000 tapes at, let’s assume, six hours per tape, for a total of 420,000 hours. No wonder the Internet Archive hadn’t finished digitizing the footage as of October 2025. Add to that a lack of funding for the enormous amount of labor the work must require.
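To put that estimate in perspective, here is a quick back-of-the-envelope calculation. It rests on the same assumption as the paragraph above, that every tape holds a full six hours; actual tape lengths surely varied, so treat the result as a rough order of magnitude rather than a measured figure.

```python
# Back-of-the-envelope estimate of the archive's size.
# Assumption: every tape holds a full six hours (not a measured figure).
TAPES = 70_000
HOURS_PER_TAPE = 6

total_hours = TAPES * HOURS_PER_TAPE   # total recorded hours
total_days = total_hours / 24          # expressed as nonstop, round-the-clock viewing
total_years = total_days / 365.25      # average year length, counting leap years

print(f"{total_hours:,} hours")          # 420,000 hours
print(f"{total_days:,.0f} days")         # 17,500 days
print(f"about {total_years:.1f} years")  # about 47.9 years
```

Under that assumption, watching the whole archive without a break would take roughly 48 years, which gives some sense of why digitizing it is slow going.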

If you want to see what they’ve uploaded so far, it’s definitely worth a look. And as long as you’re taking my advice, go watch the excellent documentary Recorder on YouTube.

OPINION — A new location transparency feature on X is revealing foreign influence on American discourse just as federal agencies designed to deal with such threats are being dismantled.

Toward the end of November, X began listing account locations in the “About this account” section of people’s (or bots’) profiles. X can also list the platform through which users access the social media site, such as the web app or a region-specific app store.

With these new transparency features, X exposed that major MAGA influencers are likely operating from Eastern Europe, Africa, and Southeast Asia. And while anti-Trump profiles posing as Americans on X haven’t made headlines, the authors found one that lists its location as Charlotte, NC, but that X indicates connects via the Nigeria App Store.

One factor driving foreign accounts to masquerade as domestic political commentators could be commercial gain. Heated political debate, abundant in the United States, drives engagement, which can be monetized. Account owners posing as Americans may also be funded or operated by America’s adversaries who seek to shape votes, increase social divisions, or achieve other strategic goals.

The problem of foreign adversaries pretending to be American is not new. During the Cold War, Soviet KGB agents even posed as KKK members and sent hate mail to Olympic athletes before the 1984 Summer Olympics. What is different now is the scale and speed of influence operations. The internet makes it dramatically easier for foreign adversaries to pose as Americans and infiltrate domestic discourse.

The past decade provides countless examples of Russia, China, and Iran targeting Americans with online influence operations. In 2022, a Chinese operation masqueraded as environmental activists in Texas to stoke protests against rare earth processing plants. Iran posed as the Proud Boys to send voters threatening emails before the 2020 elections. In 2014, Russia spread a hoax about a chemical plant explosion in Louisiana.

X’s new country of origin feature is a step in the right direction for combatting these operations. Using it, a BBC investigation revealed that multiple accounts advocating for Scottish independence connect to the platform via the Iran Android App. At first blush, this makes little sense. But Iran has a documented history of promoting Scottish independence through covert online influence operations and a track record of sowing discord wherever it can.

Disclosing origin alone paints an incomplete picture. Identifying an account’s location does not always tell you who directs or funds the account. For example, Russia has previously outsourced its attempts to influence Americans to operators in Ghana and Nigeria. America’s adversaries continue to leverage proxies in their operations, as seen in a recently exposed Nigerian YouTube network aggressively spreading pro-Kremlin narratives.

Additionally, malign actors will likely still be able to spoof their location on X. Virtual private networks (VPNs) mask a user’s real IP address, and while X appears to flag suspected VPN use, the platform may have a harder time detecting residential proxies, which route traffic through a home IP address. Sophisticated operators and privacy enthusiasts will likely find additional ways to spoof their location. For example, TikTok tracks user locations but there are easy-to-find guides on how to change one’s apparent location.

The additional data points provided by X’s transparency feature, therefore, do not provide a shortcut to attributing a nation-state or other malign actor behind an influence operation. Proper attribution still requires thorough investigation, supported by both regional and technical expertise.

Social media platforms, private companies, and non-profits play a significant role in combatting online influence operations. Platforms have access to internal data — such as emails used to create an account and other technical indicators — that allow them to have a fuller picture about who is behind an account. Non-profits across the United States, Europe, Australia, and other aligned countries have also successfully exposed many influence operations in the past purely through open-source intelligence.

The U.S. government, however, plays a unique role in countering influence operations. Only governments have the authority to issue subpoenas, access sensitive sources, and impose consequences through sanctions and indictments.

Washington, however, has significantly reduced its capabilities to combat foreign malign influence. Over the past year, it has dismantled the FBI's Foreign Influence Task Force, shut down the State Department’s Global Engagement Center, and effectively dismantled the Foreign Malign Influence Center at the Office of the Director of National Intelligence. These changes make it unclear who — if anyone — within the U.S. government oversees countering influence operations undermining American interests at home and abroad.

X’s new transparency feature reveals yet again that America’s adversaries are waging near-constant warfare against Americans on the devices and platforms that profoundly shape our beliefs and behaviors. Now the U.S. government must rebuild its capacity to address it.

The Cipher Brief is committed to publishing a range of perspectives on national security issues submitted by deeply experienced national security professionals.

Opinions expressed are those of the author and do not represent the views or opinions of The Cipher Brief.

Read more expert-driven national security insights, perspective and analysis in The Cipher Brief, because national security is everyone’s business.

EXPERT PERSPECTIVE — In recent years, the national conversation about disinformation has often focused on bot networks, foreign operatives, and algorithmic manipulation at industrial scale. Those concerns are valid, and I spent years inside CIA studying them with a level of urgency that matched the stakes. But an equally important story is playing out at the human level. It’s a story that requires us to look more closely at how our own instincts, emotions, and digital habits shape the spread of information.

This story reveals something both sobering and empowering: falsehood moves faster than truth not merely because of the technologies that transmit it, but because of the psychology that receives it. That insight is no longer just the intuition of intelligence officers or behavioral scientists. It is backed by hard data.

In 2018, MIT researchers Soroush Vosoughi, Deb Roy, and Sinan Aral published a groundbreaking study in Science titled The Spread of True and False News Online. It remains one of the most comprehensive analyses ever conducted on how information travels across social platforms.

The team examined more than 126,000 stories shared by 3 million people over more than a decade. Their findings were striking. False news traveled farther, faster, and more deeply than true news. In many cases, falsehood reached its first 1,500 viewers six times faster than factual reporting. The top 1 percent of false stories routinely reached between 1,000 and 100,000 people, whereas true stories rarely reached more than 1,000.

One of the most important revelations was that humans, not bots, drove the difference. People were more likely to share false news because the content felt fresh, surprising, emotionally charged, or identity-affirming in ways that factual news often does not. That human tendency is becoming a national security concern.

For years, psychologists have studied how novelty, emotion, and identity shape what we pay attention to and what we choose to share. The MIT researchers echoed this in their work, but a broader body of research across behavioral science reinforces the point.

People gravitate toward what feels unexpected. Novel information captures our attention more effectively than familiar facts, which means sensational or fabricated claims often win the first click.

Emotion adds a powerful accelerant. A 2017 study published in the Proceedings of the National Academy of Sciences showed that messages evoking strong moral outrage travel through social networks more rapidly than neutral content. Fear, disgust, anger, and shock create a sense of urgency and a feeling that something must be shared quickly.

And identity plays a subtle, but significant role. Sharing something provocative can signal that we are well informed, particularly vigilant, or aligned with our community’s worldview. This makes falsehoods that flatter identity or affirm preexisting fears particularly powerful.

Taken together, these forces form what some have called the “human algorithm,” meaning a set of cognitive patterns that adversaries have learned to exploit with increasing sophistication.

During my years leading digital innovation at CIA, we saw adversaries expand their strategy beyond penetrating networks to manipulating the people on those networks. They studied our attention patterns as closely as they once studied our perimeter defenses.

Foreign intelligence services and digital influence operators learned to seed narratives that evoke outrage, stoke division, or create the perception of insider knowledge. They understood that emotion could outpace verification, and that speed alone could make a falsehood feel believable through sheer familiarity.

In the current landscape, AI makes all of this easier and faster. Deepfake video, synthetic personas, and automated content generation allow small teams to produce large volumes of emotionally charged material at unprecedented scale. Recent assessments from Microsoft’s 2025 Digital Defense Report document how adversarial state actors (including China, Russia, and Iran) now rely heavily on AI-assisted influence operations designed to deepen polarization, erode trust, and destabilize public confidence in the U.S.

This tactic does not require the audience to believe a false story. Often, it simply aims to leave them unsure of what truth looks like. And that uncertainty itself is a strategic vulnerability.

If misguided emotions can accelerate falsehood, then a thoughtful and well-organized response can help ensure factual information arrives with greater clarity and speed.

One approach involves increasing what communication researchers sometimes call truth velocity, the act of getting accurate information into public circulation quickly, through trusted voices, and with language that resonates rather than lectures. This does not mean replicating the manipulative emotional triggers that fuel disinformation. It means delivering truth in ways that feel human, timely, and relevant.

Another approach involves small, practical interventions that reduce the impulse to share dubious content without thinking. Research by Gordon Pennycook and David Rand has shown that brief accuracy prompts (small moments that ask users to consider whether a headline seems true) meaningfully reduce the spread of false content. Similarly, cognitive scientist Stephan Lewandowsky has demonstrated the value of clear context, careful labeling, and straightforward corrections to counter the powerful pull of emotionally charged misinformation.

Organizations can also help their teams understand how cognitive blind spots influence their perceptions. When people know how novelty, emotion, and identity shape their reactions, they become less susceptible to stories crafted to exploit those instincts. And when leaders encourage a culture of thoughtful engagement, where colleagues pause before sharing, investigate the source, and notice when a story seems designed to provoke, it creates a ripple effect of sounder judgment.

In an environment where information moves at speed, even a brief moment of reflection can slow the spread of a damaging narrative.

A core part of this challenge involves reclaiming the mental space where discernment happens, what I refer to as Mind Sovereignty™. This concept is rooted in a simple practice: notice when a piece of information is trying to provoke an emotional reaction, and give yourself a moment to evaluate it instead.

Mind Sovereignty™ is not about retreating from the world or becoming disengaged. It is about navigating a noisy information ecosystem with clarity and steadiness, even when that ecosystem is designed to pull us off balance. It is about protecting our ability to think clearly before emotion rushes ahead of evidence.

This inner steadiness, in some ways, becomes a public good. It strengthens not just individuals, but the communities, organizations, and democratic systems they inhabit.

In the intelligence world, I always thought that truth was resilient, but it cannot defend itself. It relies on leaders, communicators, technologists, and more broadly, all of us, who choose to treat information with care and intention. Falsehood may enjoy the advantage of speed, but truth gains power through the quality of the minds that carry it.

As we develop new technologies and confront new threats, one question matters more than ever: how do we strengthen the human algorithm so that truth has a fighting chance?

All statements of fact, opinion, or analysis expressed are those of the author and do not reflect the official positions or views of the U.S. Government. Nothing in the contents should be construed as asserting or implying U.S. Government authentication of information or endorsement of the author's views.

U.S. focus on irregular warfare (IW) and its subset, cognitive warfare, has been erratic. The U.S. has struggled to adapt its plans to cognitive warfare, even as our leaders have consistently called for more expertise in this type of warfare. In 1962, President Kennedy challenged West Point graduates to understand: "another type of war, new in its intensity, ancient in its origin, that would require a whole new kind of strategy, a wholly different kind of force, forces which are too unconventional to be called conventional forces…" More than twenty years later, in 1986, Congress passed the Nunn-Cohen Amendment, which established Special Operations Command (SOCOM) and the Defense Department’s Special Operations and Low-Intensity Conflict (SO/LIC) office. Roughly twenty years after that, then-Secretary of Defense Robert Gates said that DoD needed “to display a mastery of irregular warfare comparable to that which we possess in conventional combat.”

After twenty years of developing IW best practices in counterterrorism, the 2020 Irregular Warfare Annex to the National Defense Strategy emphasized the need to institutionalize irregular warfare “as a core competency with sufficient, enduring capabilities to advance national security objectives across the spectrum of competition and conflict.” In December 2022, a RAND commentary pointed out that the U.S. military has failed to master IW above the tactical level. I submit that we have failed because we have focused on technology at the expense of expertise and creativity, and that we need to balance technology with developing a workforce that thinks differently from the engineers and scientists who create our weapons and collection systems.

Adversaries Ahead of Us

IW, and especially cognitive warfare, is high-risk and by definition uses manipulative practices to obtain results. Some policy leaders are hesitant to use this approach to develop influence strategies, which has slowed the development of tools and strategies to counter our adversaries. U.S. adversaries are experts at IW and do not face many of the political, legal, or oversight hurdles that U.S. IW specialists do.

Chinese military writings highlight the PRC’s use of what we would call IW in its “three warfares” concept: using public opinion, legal warfare, and psychological operations to spread positive views of China and influence foreign governments in ways favorable to China. General Wang Haijiang, commander of the People's Liberation Army's (PLA) Western Theatre Command, wrote in an official People’s Republic of China (PRC) newspaper that the Ukraine war has produced a new era of hybrid warfare, intertwining “political warfare, financial warfare, technological warfare, cyber warfare, and cognitive warfare.” The PRC’s Belt and Road Initiative and Digital Silk Road are prime examples of using economic coercion as irregular warfare. Its Confucius Institutes underscore how it is trying to influence foreign populations through language and cultural training.

Russia uses IW to attempt to ensure the battle is won before military operations begin and to enhance its conventional forces. Russia calls this hybrid war, and we saw it in the use of “little green men” going into Crimea in 2014 and of the paramilitary Wagner forces around the world. Russia has also waged a disinformation campaign against the U.S. on digital platforms and even conducted assassinations and sabotage on foreign soil as ways to mold the battle space toward its goals.

What Is Needed

U.S. architects of IW seem to primarily focus on oversight structures and budget, and less on how to develop an enduring capability.

Through the counterterrorism fight, the U.S. learned how to use on-the-ground specialists, develop relationships at tribal levels, and understand cultures to influence the population. The U.S. has the tools and the lessons learned that would enable a more level playing field against its adversaries, but it is not putting enough emphasis on cognitive warfare. A key to the way forward is developing special operations forces (SOF) personnel, and the intelligence professionals who support them, who understand the people, the geography, and the societies they are trying to influence and affect. We then must go further and reward creativity and cunning in developing cognitive warfare strategies.

The Department of Defense and the intelligence community have flirted with the need for expertise in the human domain or sociocultural sphere for years. The Department of Defense put millions of dollars into sociocultural work around 2015. This focus went away as we started concentrating more on near-peer competition. Instead, we focused on technology, better weapons, and more complex collection platforms as a way to compete with these adversaries. We even looked to cut Human Intelligence (HUMINT) to move toward what some call a lower-risk approach to collection: using technology instead of humans.

SOF personnel are considered the military’s most creative members. They are chosen for their ability to adapt, blend in, and think outside the box. This ingenuity needs to be encouraged. We need to balance oversight mindfully without stifling the uniqueness that makes IW so successful. While some of this creativity may come naturally, we need to put in place training that fosters inventiveness and draws out these members’ ability to think through the impossible. Focused military classes across the services must build on the latest practices for cultivating creativity and out-of-the-box thinking. This entrepreneurial approach is not typically rewarded in a military that is focused on planning, rehearsals, and more planning.

Focusing on Intelligence and Irregular Warfare

An important part of the equation for irregular warfare is intelligence. This foundation for irregular warfare work is often left out in the examination of what is needed for the U.S. to move IW forward. In the SOF world, operators and intelligence professionals overlap more than in any other military space. Intelligence officers who support IW need to have the same creative mindset as the operators. They also need to be experts in their regional areas—just like the SOF personnel.

The intelligence community’s approach to personnel over the past twenty or so years works against support for IW. Since the fall of the Soviet Union, the intelligence community has moved from an expertise-based system to one that is more focused on processes. We used to have deep experts on all aspects of the adversary: analysts or collectors who had spent years focused on knowing everything about one foreign leader or one aspect of a country’s industry, with a deep knowledge of that country’s language and culture. With many more adversaries, and with collection platforms that are much more expensive than those developed in the early days of the intelligence community, the thinking goes that we cannot afford the detailed expert of yore anymore. The current premise is that if you know the processes for writing a good analytical piece or for being a good case officer, the community can plug and play you in any context. In effect, we have put a premium on process while neglecting expertise. As with all things, we need to balance these two important aspects of intelligence work.

To truly understand and use IW, we need to develop expert regional analysts and human intelligence personnel: individuals who understand the human domain they are studying. We need to understand how the enemy thinks to be able to provide that precision to the operator. This insight comes only after years of studying the adversary. We need to reward those experts and celebrate them just as much as we do the adaptable plug-and-play analyst or human intelligence officer. Individuals who speak and understand the nuances of our adversaries’ languages, and who understand their cultures and patterns of life, are the SOF member’s best tool for advancing competition in IW. Developing this workforce must be a first thought, not an afterthought, in the development of our irregular warfare doctrine.

CIA Director William Casey testified before Congress in 1981:

“The wrong picture is not worth a thousand words. No photo, no electronic impulse can substitute for direct on the scene knowledge of the key factors in a given country or region. No matter how spectacular a photo may be it cannot reveal enough about plans, intentions, internal political dynamics, economics, etc. Technical collection is of little help in the most difficult problem of all—political intentions. This is where clandestine human intelligence can make a difference.”

Not only are analytical experts important in support of IW, but so are HUMINT experts. We have focused on technology to fill intelligence gaps to the detriment of human intelligence. The Defense Intelligence enterprise has looked for ways to cut its HUMINT capability when we should be increasing our use of HUMINT collection and HUMINT-enabled intelligence activities. In 2020, Defense One reported on a Defense Intelligence Agency (DIA) plan to cut U.S. defense attachés in several West African countries and downgrade the ranks of others in eight countries. Many advocate for taking humans out of the loop as much as possible. The theory is that this lowers the risk of capture or leaks. As any regional expert will tell you, while satellites and drones can provide an incredible amount of intelligence, from pictures to bits of conversation, what they cannot provide is the context for those pictures or snippets of conversation. As Director Casey implied, only the expert who has lived on the ground, among the people he or she is reporting on, can truly grasp nuances and understand local contexts, allegiances, and sentiments.

While it is important to continue to upgrade technology and have specialists who fly drones and perform other data functions, those functions must be fused with human understanding of the adversary and the terrain. While algorithms can sift through vast amounts of data, human operatives and analysts ensure the contextual relevance of this data. Technologies cannot report on the nuances of feelings and emotions. The regional experts equip SOF operators with the nuanced understanding required to navigate the complexities that make up the “prior to bang” playing field. This expertise married with cunning and creativity will give us the tools we need to combat our adversary in the cognitive warfare domain.

Conclusion

Contextual, human-centric understanding is paramount for developing cognitive warfare plans and operations that can compete with our adversaries and keep us out of a kinetic fight. Those who try to make warfare or intelligence into a science miss the truth: to be proficient in either, art is a must. We need expertise to be able to decipher the stories, motives, and aspirations that make cognitive warfare unique. Regional intelligence experts discern the patterns, motives, and vulnerabilities of adversaries, which are key needs for developing IW campaigns and for influencing individuals and societies. We need seasoned human intelligence personnel, targeters, and analysts who are experts on the adversary to be able to do this. We also need to develop and reward creativity, which is a must in this world.

We also have to be upfront and acknowledge the need to manipulate our adversaries. U.S. decision makers must concede that cognitive warfare is essential to winning the next war, and these leaders must be willing to take calculated risks to mount campaigns that influence and manipulate.

The cost of cognitive warfare is a rounding error compared with the cost of developing new technical intelligence collection platforms and their massive infrastructures. Yet this rounding error is a key linchpin for irregular warfare, and irregular warfare is our most likely avenue for avoiding a kinetic war. Human operatives, out-of-the-box thinking, expert analysts, and human intelligence personnel are the bridges that turn data into actionable insights. They allow our SOF community to practice the type of irregular warfare the U.S. has historically proven it can provide, and must provide, to counter our adversaries and win the cognitive war we are currently experiencing.

Read more expert-driven national security insights, perspective and analysis in The Cipher Brief because National Security is Everyone’s Business.

Even seemingly trivial posts can add to divisions already infecting the nation. Over the summer, a deepfake video depicting Rep. Alexandria Ocasio-Cortez (D-NY) discussing the perceived racist overtones of a jeans commercial went viral. At least one prominent news commentator was duped, sharing misinformation with his audience. While the origin of the fake is unknown, foreign adversaries, namely China, Russia, and Iran, often exploit domestic wedge issues to erode trust in elected officials.

Last year, Sen. Ben Cardin (D-MD) was deceived by a deepfake of Dmytro Kuleba, the former foreign minister of Ukraine, in an attempt to get the senator to reveal sensitive information about Ukrainian weaponry. People briefed on the FBI’s investigation into the incident suggest that the Russian government could be behind the deepfake, and that the senator was being goaded into making statements that could be used for propaganda purposes.

In another incident, deepfake audio recordings of Secretary of State Marco Rubio deceived at least five government officials and three foreign ministers. The State Department diplomatic cable announcing the deepfake discovery also referenced an additional investigation into a Russia-linked cyber actor who had “posed as a fictitious department official.”

Meanwhile, researchers at Vanderbilt University’s Institute of National Security revealed that a Chinese company, GoLaxy, has used artificial intelligence to build psychological profiles of individuals including 117 members of Congress and 2,000 American thought leaders. Using these profiles, GoLaxy can tailor propaganda and target it with precision.

While the company denies that it — or its backers in the Chinese Communist Party — plan to use its advanced AI toolkit for influence operations against U.S. leaders, it allegedly has already done so in Hong Kong and Taiwan. Researchers say that in both places, GoLaxy profiled opposition voices and thought leaders and targeted them with curated messages on X (formerly Twitter), working to change their perception of events. The company also allegedly attempted to sway Hong Kongers’ views on a draconian 2020 national security law. That GoLaxy is now mapping America’s political leadership should be deeply concerning, but not surprising.

GoLaxy is far from the only actor reportedly using AI to influence public opinion. The same AI-enabled manipulation that now focuses on national leaders will inevitably be turned on mayors, school board members, journalists, CEOs — and eventually, anyone — deepening divisions in an already deeply divided nation.

Limiting the damage will require a coordinated response drawing on federal resources, private-sector innovation, and individual vigilance.

The White House has an AI Action Plan that lays out recommendations for how deepfake detection can be improved. It starts with turning the National Institute of Standards and Technology’s Guardians of Forensic Evidence deepfake evaluation program into formal guidelines. These guidelines would establish trusted standards that courts, media platforms, and consumer apps could use to evaluate deepfakes.

These standards are important because some AI-produced videos may be impossible to detect with the human eye. Instead, forensic tools can reveal deepfake giveaways. While far from perfect, this burgeoning deepfake detection field is adapting to rapidly evolving threats. Analyzing the distribution channels of deepfakes can also help determine their legitimacy, particularly for media outlets that want to investigate the authenticity of a video.

Washington must also coordinate with the tech industry, especially social media platforms, through the proposed AI Information Sharing and Analysis Center framework to build an early warning system to monitor, detect, and inform the public of influence operations exploiting AI-generated content.

The White House should also expand collaboration between the federal Cybersecurity and Infrastructure Security Agency, FBI, and the National Security Agency on deepfake responses. This combined team would work with Congress, agency leaders, and other prominent targets to minimize the spread of unauthorized synthetic content and debunk misleading information.

Lastly, public figures need rapid-response communication playbooks to address falsehoods head-on and educate the public when deepfakes circulate. The United States can look to democratic allies like Taiwan for inspiration in dealing with state-sponsored disinformation. The Taiwanese government has adopted a “222 policy”: releasing 200 words and two photos within two hours of detecting disinformation.

Deepfakes and AI-enabled influence campaigns represent a generational challenge to truth and trust. Combating this problem will be a cat-and-mouse game, with foreign adversaries constantly working to outmaneuver the safeguards meant to stop them. No individual solution will be enough to stop them, but by involving the government, the media, and individuals, it may be possible to limit their damage.

The Cipher Brief is committed to publishing a range of perspectives on national security issues submitted by deeply experienced national security professionals.

Opinions expressed are those of the author and do not represent the views or opinions of The Cipher Brief.

Have a perspective to share based on your experience in the national security field? Send it to Editor@thecipherbrief.com for publication consideration.

Read more expert-driven national security insights, perspective and analysis in The Cipher Brief

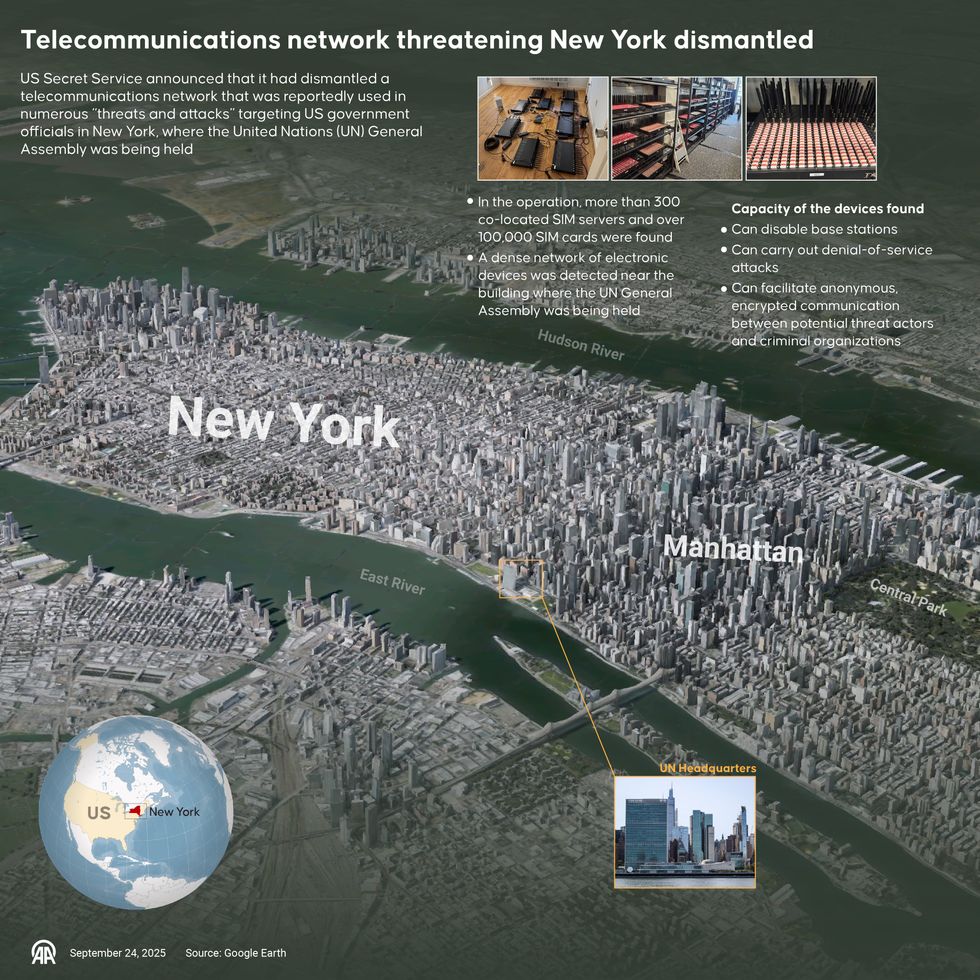

DEEP DIVE — When Secret Service agents swept into an inconspicuous building near the United Nations General Assembly late last month, they weren’t tracking guns or explosives. Instead, they dismantled a clandestine telecommunications hub that investigators say was capable of crippling cellular networks and concealing hostile communications.

According to federal officials, the operation seized more than 300 devices tied to roughly 100,000 SIM cards — an arsenal of network-manipulating tools that could disrupt the cellular backbone of New York City at a moment of geopolitical tension. The discovery, officials stressed, was not just a one-off bust but a warning sign of a much broader national security vulnerability.

The devices were designed to create what experts call a “SIM farm,” an industrial-scale operation where hundreds or thousands of SIM cards can be manipulated simultaneously. These setups are typically associated with financial fraud or bulk messaging scams. Still, the Secret Service warned that they can also be used to flood telecom networks, disable cell towers, and obscure the origin of communications.

In the shadow of the UN, where global leaders convene and security tensions are high, the proximity of such a system raised immediate questions about intent, attribution, and preparedness.

“(SIM farms) could jam cell and text services, block emergency calls, target first responders with fake messages, spread disinformation, or steal login codes,” Jake Braun, Executive Director of the Cyber Policy Initiative at the University of Chicago and former White House Acting Principal Deputy National Cyber Director, tells The Cipher Brief. “In short, they could cripple communications just when they’re needed most.”

How SIM Farms Work

At their core, SIM farms exploit the fundamental architecture of mobile networks. Each SIM card represents a unique identity on the global communications grid. By cycling through SIMs at high speed, operators can generate massive volumes of calls, texts, or data requests that overwhelm cellular infrastructure. Such floods can mimic the effects of a distributed denial-of-service (DDoS) attack, except the assault comes through legitimate carrier channels rather than obvious malicious traffic.

“SIM farms are essentially racks of modems that cycle through thousands of SIM cards,” Dave Chronister, CEO of Parameter Security, tells The Cipher Brief. “Operators constantly swap SIM cards and device identifiers so traffic appears spread out rather than coming from a single source.”

That makes them extremely difficult to detect.

“They can mimic legitimate business texts and calls, hide behind residential internet connections, or scatter equipment across ordinary locations so there’s no single, obvious signal to flag,” Chronister continued. “Because SIM farms make it hard to tie a number back to a real person, they’re useful to drug cartels, human-trafficking rings and other organized crime, and the same concealment features could also be attractive to terrorists.”

That ability to blend in, experts highlight, is what makes SIM farms more than just a criminal nuisance.

While SIM farms may initially be used for financial fraud, their architecture can be easily repurposed for coordinated cyber-physical attacks. That dual-use nature makes them especially appealing to both transnational criminal groups and state-backed intelligence services.
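To make the detection problem Chronister describes concrete, here is a toy Python simulation. It is not a description of any real carrier system. It assumes a hypothetical per-number threshold and a hypothetical threshold on one shared attribute (here, the serving cell site); both limits and the `Message` fields are illustrative assumptions. Spreading 20,000 messages across 1,000 SIMs keeps every number under the per-sender limit, while grouping by the shared attribute still exposes the burst.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Hashable, Iterable

# Hypothetical thresholds, chosen only for illustration.
PER_SENDER_LIMIT = 50   # messages per phone number per interval
PER_SITE_LIMIT = 1_000  # messages per cell site per interval


@dataclass(frozen=True)
class Message:
    sender: str     # hypothetical SIM / phone-number identifier
    cell_site: str  # hypothetical serving cell-site identifier
    body: str


def rotate_traffic(n_messages: int, n_sims: int, site: str, body: str) -> list[Message]:
    """Spread messages round-robin across many SIM identities, as a SIM farm might."""
    return [Message(f"sim-{i % n_sims}", site, body) for i in range(n_messages)]


def flagged(msgs: Iterable[Message], key: Callable[[Message], Hashable], limit: int) -> set:
    """Return every group (as defined by `key`) whose message count exceeds `limit`."""
    counts = Counter(key(m) for m in msgs)
    return {group for group, count in counts.items() if count > limit}


traffic = rotate_traffic(20_000, 1_000, "site-A", "Your account is locked. Reply with code.")

# Per-number view: each SIM sent only 20 messages, so nothing is flagged.
print(flagged(traffic, lambda m: m.sender, PER_SENDER_LIMIT))   # set()
# Aggregate view: all 20,000 messages share one cell site, so the burst stands out.
print(flagged(traffic, lambda m: m.cell_site, PER_SITE_LIMIT))  # {'site-A'}
```

As Chronister notes, operators can also scatter equipment across ordinary locations and vary message content, which would defeat this particular grouping; real carrier analytics would need to combine many more signals.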

Who Might Be Behind It?

The Secret Service, however, has not publicly attributed the network near the UN to any specific individual or entity. Investigators are weighing several possibilities: a transnational fraud ring exploiting the chaos of UN week to run large-scale scams, or a more concerning scenario where a state-backed group positioned the SIM farm as a contingency tool for disrupting communications in New York.

Officials noted that the operation’s sophistication suggested it was not a low-level criminal endeavor. The hardware was capable of sustained operations against multiple carriers, and its sheer scale (100,000 SIM cards) far exceeded that of typical fraud schemes. That raised the specter of hostile governments probing U.S. vulnerabilities ahead of potential hybrid conflict scenarios.

Analysts note that Russia, China, and Iran have all been implicated in blending criminal infrastructure with state-directed cyber operations. Yet, these setups serve both criminals and nation-states, and attribution requires more details than are publicly available.

“Criminal groups use SIM farms to make money with scams and spam,” said Braun. “State actors can use them on a bigger scale to spy, spread disinformation, or disrupt communications — and sometimes they piggyback on criminal networks.”

One source in the U.S. intelligence community, who spoke on background, described that overlap as “hybrid infrastructure by design.”

“It can sit dormant as a criminal enterprise for years until a foreign government needs it. That’s what makes it so insidious,” the source tells The Cipher Brief.

In Chronister’s view, the “likely explanation is that it’s a sophisticated criminal enterprise.”

“SIM-farm infrastructure is commonly run for profit and can be rented or resold. However, the criminal ecosystem is fluid: nation-states, terrorist groups, or hybrid actors can and do co-opt criminal capabilities when it suits them, and some state-linked groups cultivate close ties with criminal networks,” he said.

The Broader National Security Blind Spot

The incident during the United Nations General Assembly also underscores a growing blind spot in U.S. protective intelligence: telecommunications networks as contested terrain. For decades, federal resources have focused heavily on cybersecurity, counterterrorism, and physical threats. At the same time, the connective tissue of modern communications has often been treated as a commercial domain, monitored by carriers rather than security agencies.

The Midtown bust suggests that assumption no longer holds. The Secret Service itself framed the incident as a wake-up call.

“The potential for disruption to our country’s telecommunications posed by this network of devices cannot be overstated,” stated U.S. Secret Service Director Sean Curran. “The U.S. Secret Service’s protective mission is all about prevention, and this investigation makes it clear to potential bad actors that imminent threats to our protectees will be immediately investigated, tracked down and dismantled.”

However, experts warn that U.S. defenses remain fragmented. Carriers focus on fraud prevention, intelligence agencies monitor foreign adversaries, and law enforcement investigates domestic crime. The seams between those missions are precisely where SIM farms thrive.

Hybrid Warfare and the Next Front Line

The rise of SIM farms reflects the evolution of hybrid warfare, where the boundary between criminal activity and state action blurs, and adversaries exploit commercial infrastructure as a means of attack. Just as ransomware gangs can moonlight as proxies for hostile intelligence services, telecom fraud networks may double as latent disruption tools for foreign adversaries.

Additionally, the threat mirrors patterns observed abroad. In Ukraine, officials have reported Russian operations targeting cellular networks to disrupt battlefield communications and sow panic among civilians. In parts of Africa and Southeast Asia, SIM farms have been linked to both organized crime syndicates and intelligence-linked influence campaigns.

That same playbook, experts caution, could be devastating if applied in the heart of a global city.

“If activated during a crisis, such networks could flood phone lines, including 911 and embassy hotlines, to sow confusion and delay coordination. They can also blast fake alerts or disinformation to trigger panic or misdirect first responders, making it much harder for authorities to manage an already volatile situation,” Chronister said. “Because these setups are relatively cheap and scalable, they are an inexpensive but effective way to complicate emergency response, government decision-making, and even protective details.”

Looking Ahead

The dismantling of the clandestine telecom network in New York may have prevented an imminent crisis, but experts caution that it is unlikely to be the last of its kind. SIM farms are inexpensive to set up, scalable across borders, and often hidden in plain sight. They represent a convergence of cyber, criminal, and national security threats that the U.S. is only beginning to treat as a unified challenge.

When it comes to what needs to be done next, Braun emphasized the importance of “improving information sharing between carriers and government, investing in better tools to spot hidden farms, and moving away from SMS for sensitive logins.”

“Treat SIM farms as a national security threat, not just telecom fraud. Limit access to SIM farm hardware and punish abuse. Help smaller carriers strengthen defenses,” he continued. “And streamline legal steps so takedowns happen faster.”
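Braun does not name a specific replacement for SMS login codes. One widely used alternative is an authenticator app implementing time-based one-time passwords (TOTP, RFC 6238): the code is computed on the user’s device from a shared secret and the current time, so nothing travels over the cellular network for a SIM farm or SIM swap to intercept. The Python sketch below uses only the standard library; the function names and the one-step clock-drift window are illustrative choices, and it omits the secret storage, enrollment, and rate limiting a real deployment would need.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


# RFC 6238 test secret; at t=59 s the 8-digit SHA-1 code is 94287082.
secret = b"12345678901234567890"
print(totp(secret, at=59, digits=8))  # 94287082
```

`hmac.compare_digest` is used so the comparison time does not leak how many leading digits of a guess were correct.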

Chronister acknowledged that while “carriers are much better than they were five or ten years ago, as they’ve invested in spam filtering and fraud analytics, attackers can still get through when they rotate SIMs quickly, use eSIM provisioning, or spread activity across jurisdictions.”

“Law enforcement and intelligence have powerful tools, but legal, technical, and cross-border constraints mean detection often outpaces confident attribution and rapid takedown. Make it harder to buy and cycle through SIMs in bulk and strengthen identity verification for phone numbers,” he added. “Require faster, real-time information-sharing between carriers and government during traffic spikes, improve authentication for public alerts, and run regular stress-tests and red-team exercises against telecom infrastructure. Finally, build joint takedown and mutual-assistance arrangements with allies so attackers can’t simply reconstitute operations in another country.”
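Chronister does not say how a “traffic spike” would be defined for this kind of information-sharing. As one illustrative assumption, a carrier could compare each interval’s message count for a network segment against an exponentially weighted moving average (EWMA) baseline and flag intervals that exceed it by a fixed factor. The class below is a minimal Python sketch of that idea; the smoothing factor, threshold, and warm-up length are hypothetical values, not figures from any carrier or agency.

```python
from dataclasses import dataclass


@dataclass
class SpikeDetector:
    """Flags intervals whose volume exceeds an EWMA baseline by a fixed factor."""
    alpha: float = 0.1      # hypothetical EWMA smoothing factor
    threshold: float = 3.0  # hypothetical: flag volumes above 3x the baseline
    warmup: int = 5         # intervals used to seed the baseline before flagging
    baseline: float = 0.0
    seen: int = 0

    def update(self, volume: float) -> bool:
        """Feed one interval's message count; return True if it looks like a spike."""
        self.seen += 1
        if self.seen <= self.warmup:
            # Seed the baseline with the running mean of the first few intervals.
            self.baseline += (volume - self.baseline) / self.seen
            return False
        spike = volume > self.threshold * self.baseline
        if not spike:
            # Learn only from normal intervals so a flood does not inflate the baseline.
            self.baseline = self.alpha * volume + (1 - self.alpha) * self.baseline
        return spike


detector = SpikeDetector()
per_minute = [100, 110, 95, 105, 90, 100, 480, 102]  # made-up message counts
print([detector.update(v) for v in per_minute])
# [False, False, False, False, False, False, True, False]
```

Skipping baseline updates during flagged intervals keeps a sustained flood from quickly becoming the new normal; the trade-off is that a genuine, lasting jump in demand would stay flagged until an operator resets the baseline.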

EXECUTIVE SUMMARY:

Ahead of the U.S. elections, adversaries are weaponizing social media to gain political sway. Russian and Iranian efforts have become increasingly aggressive and transparent. However, China appears to have taken a more carefully calculated and nuanced approach.

China’s apparent disinformation efforts have little to do with positioning one political candidate as preferable to another. Rather, its maneuvers may aim to undermine trust in voting systems, in elections, and in America in general by amplifying criticism and sowing discord.

In recent months, the Chinese disinformation network, known as Spamouflage, has pursued “advanced deceptive behavior.” It has quietly launched thousands of accounts across more than 50 domains, and used them to target people across the United States.

The group has been active since 2017 but has recently stepped up its efforts.

The Spamouflage network’s accounts present fabricated identities, which sometimes change on a whim. These profiles have been spotted on X, TikTok, and elsewhere.

For example, one account, “Harlan,” claimed to be a 29-year-old New York resident and Army veteran. His profile picture showed a well-groomed young man. A few months later, however, the account shifted personas: Harlan suddenly appeared to be a 31-year-old Republican influencer from Florida. At least four different accounts were found to mimic Trump supporters, part of a tactic dubbed “MAGAflage.”

The fake profiles, including the fake photos, may have been generated through artificial intelligence tools, according to analysts.

Accounts have exhibited certain patterns, using hashtags like #American, while presenting themselves as voters or groups that “love America” but feel alienated by political issues that range from women’s healthcare to Ukraine.

In June, one post on X read “Although I am American, I am extremely opposed to NATO and the behavior of the U.S. government in war. I think soldiers should protect their own country’s people and territory…should not initiate wars on their own…” The text was accompanied by an image showing NATO’s expansion across Europe.

Disinformation campaigns that create (and weaponize) fake profiles like these are likely to be highly effective at crafting and distributing phishing emails, because the emails will appear to come from credible sources.

This makes it essential for organizations to implement, and for employees to adhere to, advanced verification methods that can confirm the authenticity of communications.
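The post does not specify which verification methods it has in mind. One common building block for email is to check the SPF, DKIM, and DMARC verdicts that an organization’s own inbound mail server records in the Authentication-Results header (RFC 8601). The Python sketch below uses only the standard library; the trusted server name `mx.example.com` is a placeholder assumption, and the regular-expression parsing is deliberately simplified rather than a full RFC 8601 parser.

```python
import email
import re
from email import policy

# Placeholder assumption: the authserv-id your own inbound mail server writes.
TRUSTED_AUTHSERV_ID = "mx.example.com"

VERDICT = re.compile(r"\b(spf|dkim|dmarc)=(\w+)", re.IGNORECASE)


def auth_verdicts(raw_message: str) -> dict[str, str]:
    """Collect SPF/DKIM/DMARC results from Authentication-Results headers we trust."""
    msg = email.message_from_string(raw_message, policy=policy.default)
    verdicts: dict[str, str] = {}
    for header in msg.get_all("Authentication-Results", []):
        value = str(header)
        # The authserv-id is the first token before the first semicolon.
        authserv_id = (value.split(";", 1)[0].split() or [""])[0].lower()
        if authserv_id != TRUSTED_AUTHSERV_ID:
            continue  # ignore headers added by anyone else; they may be forged
        for method, result in VERDICT.findall(value):
            verdicts.setdefault(method.lower(), result.lower())
    return verdicts


def passes_dmarc(raw_message: str) -> bool:
    """True only if our own server recorded dmarc=pass for this message."""
    return auth_verdicts(raw_message).get("dmarc") == "pass"
```

Ignoring headers stamped by any other server matters because a sender can add its own forged Authentication-Results line. A DMARC pass also only shows that the message really came from the domain in the From address; it says nothing about whether a look-alike domain or persona is legitimate.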

Within your organization, if you haven’t done so already, consider implementing the following:

To effectively counter threats, organizations need to pursue a dynamic, multi-dimensional approach. But it’s tough.

The post Spamouflage’s advanced deceptive behavior reinforces need for stronger email security appeared first on CyberTalk.