X Rolls out ‘Starterpacks’ for New Users to Discover Crypto, Tech Feeds

X is preparing to launch “Starterpacks,” an onboarding feature designed to help new users discover the best accounts and feeds based on interests such as crypto and technology.

The feature was unveiled on Thursday and will roll out in the coming weeks, according to Nikita Bier, Head of Product at X.

Over the last few months, we scoured the world for the top posters in every niche & country

We've compiled them into a new tool called Starterpacks: to help new users find the best accounts—big or small—for their interests

Reply below with a topic you're most interested in… pic.twitter.com/MYIIQAaJaL

— Nikita Bier (@nikitabier) January 21, 2026

In the crypto category, the curated starterpack will include accounts focused on memecoin trading, offering real-time market trends and sentiment from active traders.

A short video posted by Bier previewed how Starterpacks work, showing users selecting their interests during onboarding and then following a curated list of accounts.

Crypto Twitter Backlash – Is X Trying to Revive It?

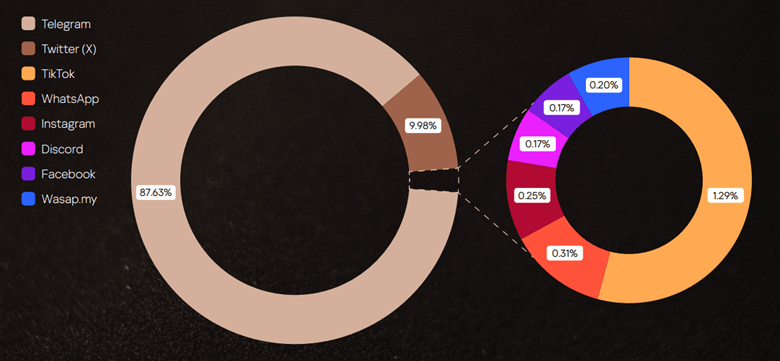

The announcement comes days after Bier’s comments about Crypto Twitter sparked a backlash in the community, where users have complained about the declining visibility of crypto content on X.

“Crypto Twitter (CT) is dying from suicide, not from the algorithm,” he wrote in response to those complaints.

The remark fueled growing frustration within the crypto community, where many users believe the platform is intentionally limiting the reach of crypto-related posts. Bier, however, insisted that the issue is not tied to X’s algorithm.

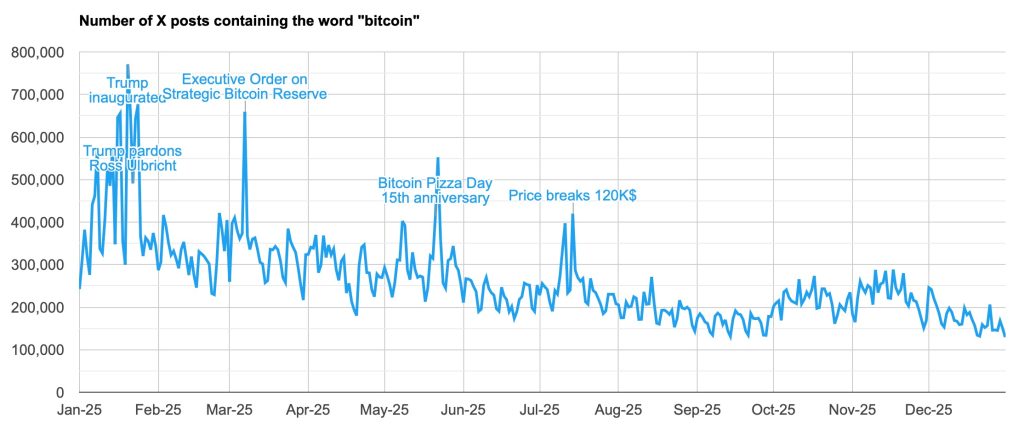

On Wednesday, Bitcoin cypherpunk Jameson Lopp wrote that there were 96 million posts on X containing ‘Bitcoin’ in 2025, a 32% drop year-over-year.

Although the figure does not capture overall crypto engagement, the post fueled concerns about content discovery and algorithmic shifts on the platform.

Vitalik Buterin Calls for Better Crypto Social Media

In a separate post on Wednesday, Ethereum co-founder Vitalik Buterin stressed the need for better mass communication tools.

“We need mass communication tools that serve the user’s long-term interest, not maximize short-term engagement,” he wrote on X.

In 2026, I plan to be fully back to decentralized social.

If we want a better society, we need better mass communication tools. We need mass communication tools that surface the best information and arguments and help people find points of agreement. We need mass communication… https://t.co/ye249HsojJ

— vitalik.eth (@VitalikButerin) January 21, 2026

He also noted that crypto social projects have often gone the wrong way.

“Decentralized social should be run by people who deeply believe in the ‘social’ part, and are motivated first and foremost by solving the problems of social,” he added.

The post X Rolls out ‘Starterpacks’ for New Users to Discover Crypto, Tech Feeds appeared first on Cryptonews.