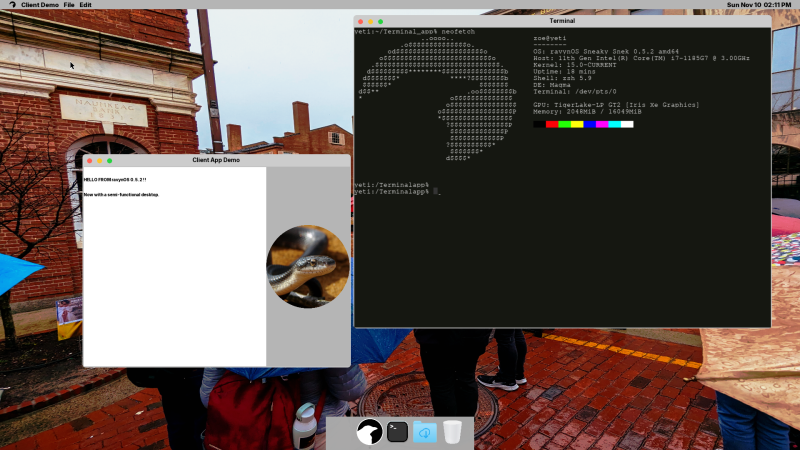

Creating User-Friendly Installers Across Operating Systems

After you have written the code for some awesome application, you of course want other people to be able to use it. Although simply directing them to the source code on GitHub or similar is an option, not every project lends itself to the traditional configure && make && make install, with dependencies often being the sticking point.

Asking the user to install dependencies and set up any filesystem links is an option, but having an installer of some type tackle all this is of course significantly easier. Typically this would contain the precompiled binaries, along with any other required files which the installer can then copy to their final location before tackling any remaining tasks, like updating configuration files, tweaking a registry, setting up filesystem links and so on.

As simple as this sounds, it comes with a lot of gotchas, with Linux distributions in particular being a tough nut. Whereas on MacOS, Windows, Haiku and many other OSes you can provide a single installer file for the respective platform, for Linux things get interesting.

Windows As Easy Mode

For all the flak directed at Windows, it is hard to deny that it is a stupidly easy platform to target with a binary installer, with equally flexible options available on the side of the end-user. Although Microsoft has nailed down some options over the years, such as enforcing the user’s home folder for application data, it’s still among the easiest to install an application on.

While working on the NymphCast project, I found myself looking for a pleasant installer to wrap the binaries into, initially opting to use the NSIS (Nullsoft Scriptable Install System) installer as I had seen it around a lot. While this works decently enough, you do notice that it’s a bit crusty, and especially the more advanced features can be rather cumbersome.

This is where a friend who was helping out with the project suggested using the more modern Inno Setup instead, which is rather like the well-known InstallShield utility, except OSS and thus significantly more accessible. Thus the pipeline on Windows became the following:

- Install dependencies using vcpkg.

- Compile project using NMake and the MSVC toolchain.

- Run the Inno Setup script to build the .exe-based installer.
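As a rough sketch of how those three steps could be chained from a single script: the snippet below only assembles the commands and runs them in order. The package names, the Makefile name and the .iss file name are placeholders rather than the ones NymphCast uses, and it assumes that `vcpkg`, `nmake` and Inno Setup's command-line compiler `iscc` are all on the PATH of a developer command prompt.

```python
import subprocess


def windows_build_steps(packages, makefile, iss_script):
    """Return the three pipeline commands, in order, as argument lists."""
    return [
        ["vcpkg", "install", *packages],  # 1. fetch and build the dependencies
        ["nmake", "/f", makefile],        # 2. compile with the MSVC toolchain
        ["iscc", iss_script],             # 3. compile the Inno Setup script
    ]


def run_steps(steps):
    """Run each command and stop at the first one that fails."""
    for cmd in steps:
        subprocess.run(cmd, check=True)


# Placeholder names, purely for illustration.
steps = windows_build_steps(["poco", "sdl2"], "Makefile.msvc", "installer.iss")
for cmd in steps:
    print(" ".join(cmd))
```

Calling `run_steps(steps)` would execute the pipeline for real; the loop above merely prints what would be run.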

Installing applications on Windows is helped massively both by having a lot of freedom where to install the application, including on a partition or disk of choice, and by having the start menu structure be just a series of folders with shortcuts in them.

The Qt-based NymphCast Player application’s .iss file covers essentially such a basic installation process, while the one for NymphCast Server also adds the option to download a pack of wallpaper images, and asks for the type of server configuration to use.

Uninstalling such an application basically reverses the process, with the uninstaller installed alongside the application and registered in the Windows registry together with the application’s details.
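To give an idea of what that registration looks like, here is a minimal sketch of the kind of entry an installer leaves behind under the standard Uninstall registry key. Inno Setup takes care of this by itself, so this is purely illustrative: the application ID, name and uninstaller path are made up, and the sketch writes to the per-user hive rather than the system-wide one.

```python
UNINSTALL_ROOT = r"Software\Microsoft\Windows\CurrentVersion\Uninstall"


def uninstall_entry(app_id, name, version, uninstaller):
    """Build the registry key path and string values for one application."""
    key_path = UNINSTALL_ROOT + "\\" + app_id
    values = {
        "DisplayName": name,
        "DisplayVersion": version,
        # Quoted so that paths containing spaces survive.
        "UninstallString": f'"{uninstaller}"',
    }
    return key_path, values


def register(key_path, values):
    """Write the entry to the current user's registry hive (Windows only)."""
    import winreg  # imported here because the module only exists on Windows

    with winreg.CreateKeyEx(
        winreg.HKEY_CURRENT_USER, key_path, 0, winreg.KEY_WRITE
    ) as key:
        for value_name, value in values.items():
            winreg.SetValueEx(key, value_name, 0, winreg.REG_SZ, value)


# Hypothetical application details.
key_path, values = uninstall_entry(
    "ExampleApp", "Example App", "1.0", r"C:\Apps\Example\unins000.exe"
)
print(key_path)
```

The list of installed applications in the Windows settings is populated from entries like these, which is how it knows which uninstaller to launch.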

MacOS As Proprietary Mode

Things get a bit weird with MacOS, with many application installers coming inside a DMG image or PKG file. The former is just a disk image that can be used for distributing applications, and the user is generally provided with a way to drag the application into the Applications folder. The PKG file is more of a typical installer as on Windows.

Of course, the problem with anything MacOS is that Apple really doesn’t want you to do anything with MacOS if you’re not running MacOS already. This can be worked around, but just getting to the point of compiling for MacOS without running XCode on MacOS on real Apple hardware is a bit of a fool’s errand. Not to mention Apple’s insistence on signing these packages, if you don’t want the end-user to have to jump through hoops.

Although I have built both iOS and OS X/MacOS applications in the past – mostly for commercial projects – I decided to not bother with compiling or testing my projects like NymphCast for Apple platforms without easy access to an Apple system. Of course, something like Homebrew can be a viable alternative to the One True Apple Way if you merely want to get OSS on MacOS. I did add basic support for Homebrew in NymphCast, but without a MacOS system to test it on, who knows whether it works.

Anything But Linux

The world of desktop systems is larger than just Windows, MacOS and Linux, of course. Even mobile OSes like iOS and Android can be considered to be ‘desktop OSes’ with the way that they’re being used these days, especially since many smartphones and tablets can be hooked up to a larger display, keyboard and mouse.

How to bootstrap Android development, and how to develop native Android applications, has been covered before, including putting APK files together. These are the typical Android installation files, akin to other package manager packages. Of course, if you wish to publish to something like the Google Play Store, you’ll be forced into using app bundles, as well as various ways of signing the resulting package.

The idea of using a package for a built-in package manager instead of an executable installer is a common one on many platforms, with iOS and kin being similar. On FreeBSD, which also got a NymphCast port, you’d create a bundle for the pkg package manager, although you can also whip up an installer. In the case of NymphCast there is a ‘universal installer’ built into the Makefile after compilation via the fully automated setup.sh shell script, using the fact that OSes like Linux, FreeBSD and even Haiku are quite similar on a folder level.

That said, the Haiku port of NymphCast is still as much of a Beta as Haiku itself, as detailed in the write-up which I did on the topic. Once Haiku is advanced enough I’ll be creating packages for its pkgman package manager as well.

The Linux Chaos Vortex

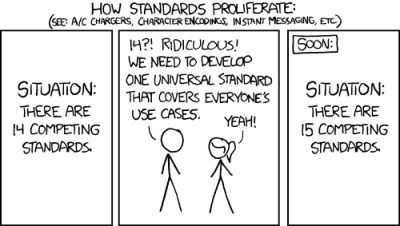

There is a simple, universal way to distribute software across Linux distributions, and it’s called the ‘tar.gz method’, referring to the time-honored method of distributing source as a tarball, for local compilation. If this is not what you want, then there is the universal RPM installation format which died along with the Linux Standard Base. Fortunately many people in the Linux ecosystem have worked tirelessly to create new standards which will definitely, absolutely, totally resolve the annoying issue of having to package your applications into RPMs, DEBs, Snaps, Flatpaks, ZSTs, TBZ2s, DNFs, YUMs, and other easily remembered standards.

It is this complete and utter chaos with Linux distros which has made me not even try to create packages for these, and instead offer only the universal .tar.gz installation method. After un-tar-ing the server code, simply run setup.sh and lean back while it compiles the thing. After that, run install_linux.sh and presto, the whole shebang is installed without further ado. I also provided an uninstall_linux.sh script to complete the experience.
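Spelled out as a script, the tarball route boils down to something like the sketch below. The script names setup.sh and install_linux.sh are the ones mentioned above, while the tarball name and the directory layout inside it are assumptions for the sake of the example. Only unpack archives that you trust.

```python
import subprocess
import tarfile
from pathlib import Path


def unpack_release(tarball, dest):
    """Extract a .tar.gz release into dest and return dest as a Path."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(tarball, "r:gz") as archive:
        archive.extractall(dest)
    return dest


def linux_install_steps():
    """The two scripts to run, in order, from the unpacked source folder."""
    return [["./setup.sh"], ["./install_linux.sh"]]


def install(source_dir):
    """Compile and then install, stopping if either script fails."""
    for cmd in linux_install_steps():
        subprocess.run(cmd, cwd=source_dir, check=True)
```

With a hypothetical release.tar.gz that unpacks into a server folder, usage would be `install(unpack_release("release.tar.gz", "build") / "server")`.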

That said, at least one Linux distro has picked up NymphCast and its dependencies like Libnymphcast and NymphRPC into their repository: Alpine Linux. Incidentally FreeBSD also has an up to date package of NymphCast in its repository. I’m much obliged to these maintainers for providing this service.

Perhaps the lesson here is that if you want to get your neatly compiled and packaged application on all Linux distributions, you just need to make it popular enough that people want to use it, so that it ends up getting picked up by package repository contributors?

Wrapping Up

With so many details to cover, there’s also the easily forgotten topic that was so prevalent in the Windows installer section: integration with the desktop environment. On Windows, the Start menu is populated via simple shortcut files, while one sort-of standard on Linux (and FreeBSD as corollary) is Freedesktop’s XDG Desktop Entry files, or .desktop files for short, which purportedly should give you a similar effect.

Only that’s not how anything works with the Linux ecosystem, as every single desktop environment has its own ideas on how these files should be interpreted, where they should be located, or whether to ignore them completely. My own experience is that relying on them for more advanced features, such as auto-starting a graphical application on boot (which cannot be done with Systemd, natch) without something throwing an XDG error or not finding a display, is basically a fool’s errand. Perhaps things are better here if you use KDE Plasma as DE, but this was an installer thing that I failed to solve after months of trial and error.
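For reference, the file format itself is simple enough. The sketch below generates a minimal Desktop Entry and works out the per-user folder where launchers are commonly picked up. The application name, command and icon are invented, and as noted, whether a given desktop environment honors the result is another matter.

```python
from pathlib import Path


def desktop_entry(name, exec_cmd, icon, categories):
    """Return the text of a minimal .desktop file for an application."""
    lines = [
        "[Desktop Entry]",
        "Type=Application",
        f"Name={name}",
        f"Exec={exec_cmd}",
        f"Icon={icon}",
        "Terminal=false",
        # Every category in the list is terminated by a semicolon.
        "Categories=" + "".join(c + ";" for c in categories),
    ]
    return "\n".join(lines) + "\n"


def user_applications_dir(env):
    """Per-user launcher folder: $XDG_DATA_HOME/applications, with the
    usual fallback to ~/.local/share/applications."""
    data_home = env.get("XDG_DATA_HOME") or str(Path.home() / ".local" / "share")
    return Path(data_home) / "applications"


# Hypothetical application details.
text = desktop_entry(
    "Example Player", "example-player", "example-player", ["AudioVideo", "Player"]
)
print(text)
```

An installer would write this text to a file such as example-player.desktop inside the folder returned by `user_applications_dir(os.environ)`.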

Long story short, OSes like Windows are pretty darn easy to install applications on, MacOS is okay as long as you have bought into the Apple ecosystem and don’t mind hanging out there, while FreeBSD is pretty simple until it touches the Linux chaos via X11 and graphical desktops. Meanwhile I’d strongly advise distributing software on Linux only as a tarball, for your sanity’s sake.