SAP employees’ trust in leadership has diminished since the restructuring

SAP’s restructuring may have been good for its bottom line, but behind the scenes, it has backfired.

The company did what it promised, said Greyhound Research chief analyst Sanchit Vir Gogia: It wrapped up its restructuring plan, affecting 10,000 employees, by early 2025, kept headcount steady, and delivered strong financial results. But, he said, “Numbers only tell half the story. Inside the organization, something broke.”

That’s evidenced by a recent internal survey, which revealed that many staff no longer trust company leaders or their strategy.

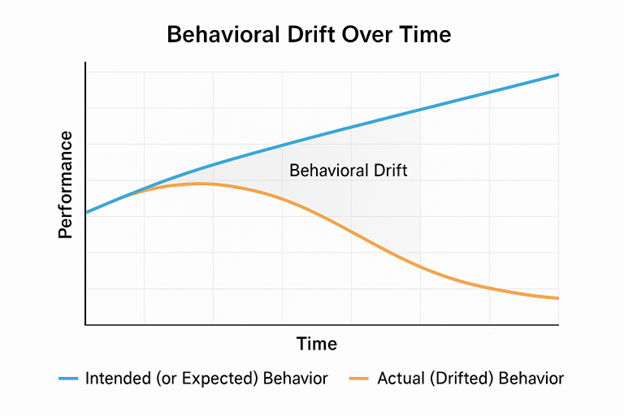

Trust in SAP’s executive board has fallen by six percentage points since April, to a mere 59%, Chief People Officer Gina Vargiu-Breuer wrote in an internal email seen by Bloomberg. In April 2021, that number was more than 80%, said Bloomberg, citing a local media report.

Confidence in SAP’s execution of its strategy has now dropped to 70%, from 77% in April of this year.

No time to learn

“That number drops even further in Germany, where only 38% say they fully trust leadership,” Gogia said. “And it’s not just a sentiment dip. Over 38,000 employees voiced specific concerns. The pattern is clear: confusion around new performance goals, not enough support to implement AI, unstable team dynamics, and barely any breathing room to learn.”

This isn’t resistance to change: “People get the vision,” he said. “The problem is executional load. You cannot drive large-scale transformation, especially AI-first initiatives, without building systems that carry your people with you.”

As part of SAP’s plan to transform into a skills-led company by 2028, 80% of employees were to be assigned to modernized job profiles by the middle of this year, Vargiu-Breuer told financial analysts attending a company event in May. This was intended to “unleash AI-personalized growth opportunities” based on an “externally benchmarked global skills taxonomy” of 1,500 future-ready skills, she said. The company devotes 15% of working time to continuous personal development, she said.

In the recent email seen by Bloomberg, however, she admitted: “The feedback shows that not every step [in the transformation] has landed how it should.”

Unfiltered Pulse

In an email statement to CIO, SAP said that the Unfiltered Pulse survey that highlighted the issues “was designed to gather nuanced feedback from employees, focusing on both strengths and areas for improvement. Employees, for instance, have stated that helpful feedback as well as learning and development opportunities are supporting their growth. Results related to team culture are also positive worldwide.”

SAP said 84% of its more than 100,000 employees responded to the most recent survey, which is conducted every six months. While there were some positives this time, it said, “the findings also clearly indicate that employee engagement and trust in the board require attention. Following increases in sentiment in the previous iteration, there has been a decrease in the recent Pulse survey. We greatly value this feedback and are taking targeted action in response. We are therefore implementing specific measures to address the input from our employees and to drive meaningful change.”

SAP has not revealed details of these measures.

However, Info-Tech Research Group senior advisory analyst Yaz Palanichamy said, “SAP employees feel a sense of disillusionment as a result of the efforts undertaken in supporting this restructuring program.”

While SAP had framed the initiative as a strategic pivot towards embracing scalable cloud and AI growth, he said, the sheer number of roles affected, and the way that number ballooned well beyond the original target of 8,000, has left many of SAP’s employees concerned about their jobs and about senior leadership.

They worry about organizational stability and clarity, and about whether there is adequate support for their reskilling, he said: “If the cultural and operational gaps concerning morale, talent retention, and organizational role alignment are not proactively addressed, this could severely hinder SAP’s growth ambitions [in cloud and AI].”

SAP is not alone

SAP isn’t the only company that has faced these issues, Gogia pointed out. “Look beyond SAP, and the same symptoms show up elsewhere. Salesforce saw trust scores crater after its 2023 layoffs. Oracle’s morale took a hit when staff felt shut out of decisions. But SAP’s case stands out because performance at the top was solid, yet employee confidence eroded underneath. That divergence is dangerous. When momentum at the surface isn’t backed by alignment at the core, cracks appear in delivery, consistency breaks down, and partners feel the wobble. Execution doesn’t fail all at once. It frays. Quietly. Progressively.”

SAP isn’t ignoring the issues, he noted. It has begun to take action, appointing leaders, communicating priorities, and revisiting how teams are measured.

“That’s good,” he said. “But the real fix will come not from announcements but from behavioral evidence. Trust comes back when people stop guessing what’s next, when systems stabilize, when leaders stay visible, when workloads balance out.”