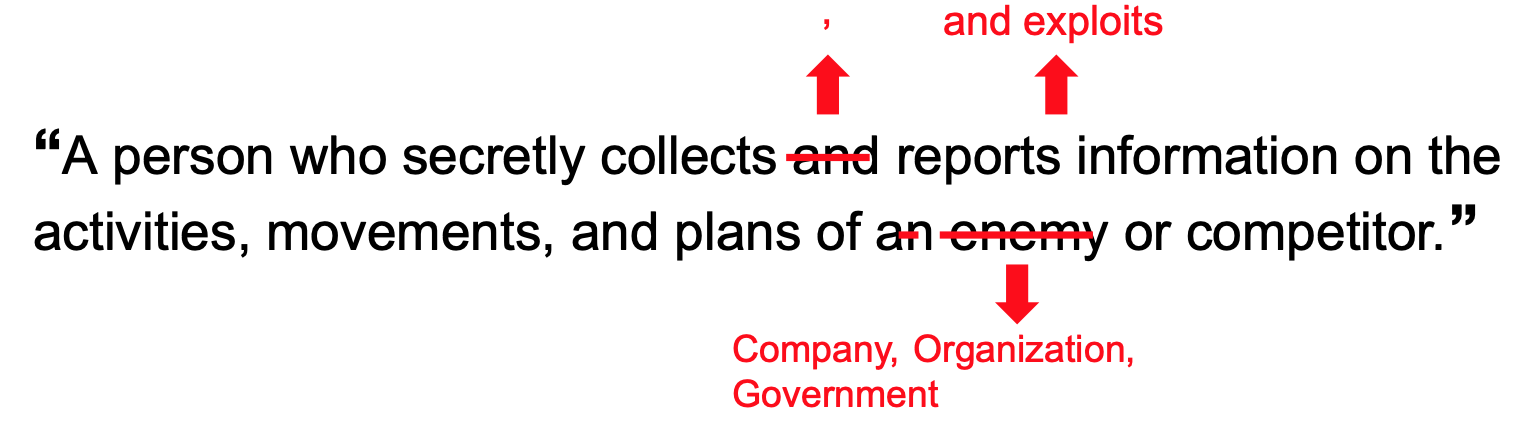

Open Source Intelligence (OSINT): Strategic Techniques for Finding Info on X (Twitter)

Welcome back, my aspiring digital investigators!

In the rapidly evolving landscape of open source intelligence, Twitter (now rebranded as X) has long been considered one of the most valuable platforms for gathering real-time information, tracking social movements, and conducting digital investigations. However, the platform’s transformation under Elon Musk’s ownership has fundamentally altered the OSINT landscape, creating unprecedented challenges for investigators who previously relied on third-party tools and API access to conduct their research.

The golden age of Twitter OSINT tools has effectively ended. Applications like Twint, GetOldTweets3, and countless browser extensions that once provided investigators with powerful capabilities to search historical tweets, analyze user networks, and extract metadata have been rendered largely useless by the platform’s new API restrictions and authentication requirements. What was once a treasure trove of accessible data has become a walled garden, forcing OSINT practitioners to adapt their methodologies and embrace more sophisticated, indirect approaches to intelligence gathering.

This fundamental shift represents both a challenge and an opportunity for serious digital investigators. While the days of easily scraping massive datasets are behind us, the platform still contains an enormous wealth of information for those who understand how to access it through alternative means. The key lies in understanding that modern Twitter OSINT is no longer about brute-force data collection, but rather about strategic, targeted analysis using techniques that work within the platform’s new constraints.

Understanding the New Twitter Landscape

The platform’s new monetization model has created distinct user classes with different capabilities and visibility levels. Verified subscribers enjoy enhanced reach, longer post limits, and priority placement in replies and search results. This has created a new dynamic where information from paid accounts often receives more visibility than content from free users, regardless of its accuracy or relevance. For OSINT practitioners, this means understanding these algorithmic biases is essential for comprehensive intelligence gathering.

The removal of legacy verification badges and the introduction of paid verification have also complicated the process of source verification. Previously, blue checkmarks provided a reliable indicator of account authenticity for public figures, journalists, and organizations. Now, anyone willing to pay can obtain verification, making it necessary to develop new methods for assessing source credibility and authenticity.

Content moderation policies have also evolved significantly, with changes in enforcement priorities and community guidelines affecting what information remains visible and accessible. Some previously available content has been removed or restricted, while other types of content that were previously moderated are now more readily accessible. The company has also updated its terms of service to state explicitly that it uses public tweets to train its AI.

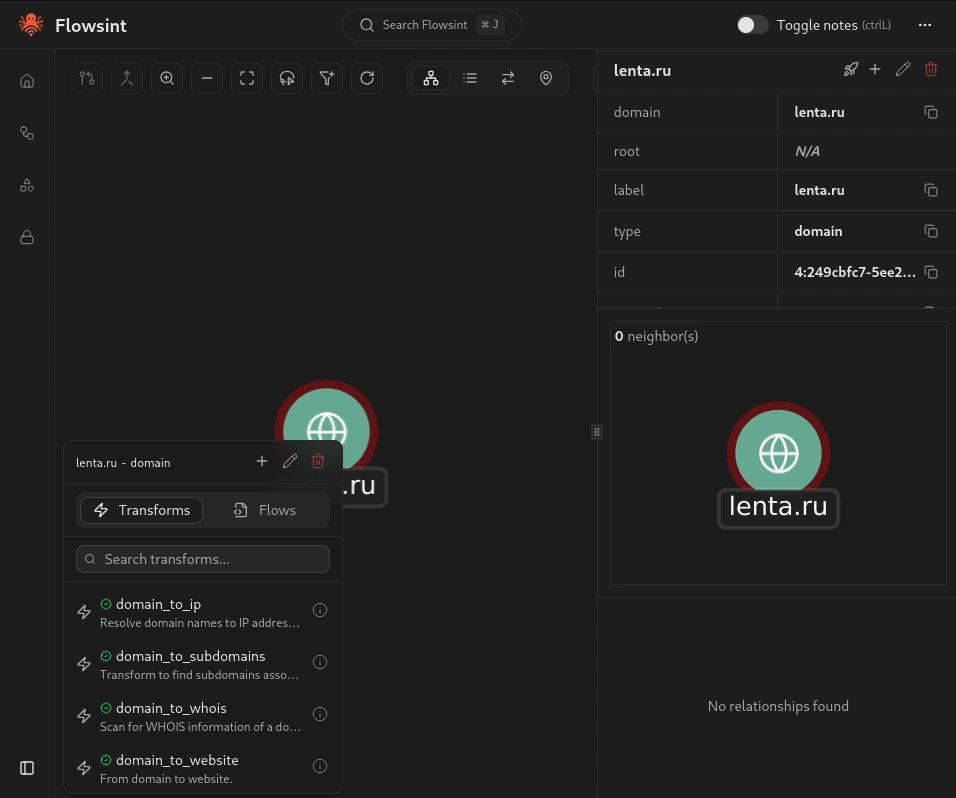

Search Operators

The foundation of effective Twitter OSINT lies in knowing how to craft precise search queries using X’s advanced search operators. These operators allow you to filter and target specific information with remarkable precision.

You can access the advanced search interface through the web version of X, but knowing the operators allows you to craft complex queries directly in the search bar.

Here are some of the most valuable search operators for OSINT purposes:

from:username – Shows tweets only from a specific user

to:username – Shows tweets directed at a specific user

since:YYYY-MM-DD – Shows tweets after a specific date

until:YYYY-MM-DD – Shows tweets before a specific date

near:location within:10mi – Shows tweets posted within a given radius (here 10 miles) of a location

filter:links – Shows only tweets containing links

filter:media – Shows only tweets containing media

filter:images – Shows only tweets containing images

filter:videos – Shows only tweets containing videos

filter:verified – Shows only tweets from verified accounts

-filter:replies – Excludes replies from search results

#hashtag – Shows tweets containing a specific hashtag

"exact phrase" – Shows tweets containing an exact phrase

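For example, to find tweets from a specific user about cybersecurity posted in the first half of 2025, you could use:

from:username cybersecurity since:2025-01-01 until:2025-07-01

Because until: matches tweets posted before the given date, the query uses 2025-07-01 so that June 30 is included.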

The power of these operators becomes apparent when you combine them. For instance, to find tweets containing images posted near a specific location during a particular event:

near:Moscow within:5mi filter:images since:2023-04-15 until:2023-04-16 drone

This would help you find images mentioning a drone that were shared on X near Moscow within that one-day window, for example during a drone attack on the city.
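If you build many of these queries, it can help to assemble them programmatically. The following is a minimal sketch, not an official client: the helper names `build_query` and `search_url` are hypothetical, and the `https://x.com/search?q=...&f=live` URL layout is an assumption based on how the web search page currently behaves and may change.

```python
from urllib.parse import quote


def build_query(*terms, **operators):
    """Join free-text terms and operator:value pairs into one search string."""
    parts = [str(term) for term in terms]
    for name, value in operators.items():
        # A trailing underscore lets callers pass Python keywords such as from_.
        parts.append(f"{name.rstrip('_')}:{value}")
    return " ".join(parts)


def search_url(query, latest=True):
    """Percent-encode the query into a web search URL (layout is an assumption)."""
    url = "https://x.com/search?q=" + quote(query, safe="")
    return url + "&f=live" if latest else url


query = build_query("cybersecurity", from_="username",
                    since="2025-01-01", until="2025-07-01")
print(query)
print(search_url(query))
```

Keeping the query as a plain string means you can paste it straight into the search bar, while the URL form is convenient for bookmarking a recurring search.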

Profile Analysis and Behavioral Intelligence

Every Twitter account leaves digital fingerprints that tell a story far beyond what users intend to reveal.

The Account Creation Time Signature

Account creation patterns often expose coordinated operations with startling precision. During an investigation of a corporate disinformation campaign, researchers discovered 23 accounts created within a 48-hour window in March 2023, all targeting the same pharmaceutical company. The accounts had been carefully aged for six months before activation, but their synchronized creation dates revealed centralized creation despite the use of different IP addresses and varied profile information.
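Once you have noted the creation dates of a set of suspect accounts by hand, spotting this kind of clustering is easy to automate. The sketch below assumes you already have a mapping of handle to creation time; the handles, dates, and the `creation_clusters` helper are all hypothetical, and the grouping is a simple greedy pass rather than a rigorous clustering method.

```python
from datetime import datetime, timedelta


def creation_clusters(accounts, window=timedelta(hours=48), min_size=3):
    """Return groups of accounts created within `window` of the group's first account.

    `accounts` maps a handle to its creation datetime.
    """
    ordered = sorted(accounts.items(), key=lambda item: item[1])
    clusters, start = [], 0
    while start < len(ordered):
        end = start
        # Extend the group while the next account is within the window of the first one.
        while (end + 1 < len(ordered)
               and ordered[end + 1][1] - ordered[start][1] <= window):
            end += 1
        if end - start + 1 >= min_size:
            clusters.append([handle for handle, _ in ordered[start:end + 1]])
        start = end + 1
    return clusters


# Hypothetical sample data for illustration only.
accounts = {
    "acct_a": datetime(2023, 3, 10, 8, 0),
    "acct_b": datetime(2023, 3, 10, 21, 30),
    "acct_c": datetime(2023, 3, 11, 14, 15),
    "acct_d": datetime(2021, 7, 2, 9, 0),
    "acct_e": datetime(2022, 11, 19, 17, 45),
}
print(creation_clusters(accounts))
```

A cluster on its own proves nothing, since popular events trigger genuine sign-up waves, so treat the output as a shortlist for closer manual review.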

Username Evolution Archaeology: A cybersecurity firm tracking ransomware operators found that a key player had changed usernames 14 times over two years, but each transition left traces. By documenting the evolution @crypto_expert → @blockchain_dev → @security_researcher → @threat_analyst, investigators revealed the account operator’s attempt to build credibility in different communities while maintaining the same underlying network connections.
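Tracking handle changes works best when you key your notes to the account's numeric ID, which stays the same when the handle changes. The sketch below assumes you log each sighting yourself as a (user ID, date, handle) tuple; the IDs, dates, and the `handle_timeline` helper are hypothetical.

```python
from collections import defaultdict


def handle_timeline(observations):
    """Group dated handle sightings by account ID and list handle changes in order.

    `observations` is an iterable of (user_id, iso_date, handle) tuples.
    """
    by_account = defaultdict(list)
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    for user_id, seen_on, handle in sorted(observations, key=lambda o: (o[0], o[1])):
        history = by_account[user_id]
        # Record a handle only when it differs from the previous sighting.
        if not history or history[-1][1] != handle:
            history.append((seen_on, handle))
    return dict(by_account)


# Hypothetical sightings for illustration only.
observations = [
    ("1001", "2023-01-05", "crypto_expert"),
    ("1001", "2023-06-11", "blockchain_dev"),
    ("1001", "2023-03-02", "crypto_expert"),
    ("1001", "2024-02-20", "security_researcher"),
]
timeline = handle_timeline(observations)
print(" -> ".join("@" + handle for _, handle in timeline["1001"]))
```

Each entry keeps the first date a handle was seen, which gives you an approximate window for when each change happened.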

Visual Identity Intelligence: Profile image analysis has become remarkably sophisticated. When investigating a suspected foreign influence operation, researchers used reverse image searches to discover that 8 different accounts were using professional headshots from the same stock photography session—but cropped differently to appear unrelated. The original stock photo metadata revealed it was purchased from a server in Eastern Europe, contradicting the accounts’ claims of U.S. residence.

Temporal Behavioral Fingerprinting

Human posting patterns are as unique as fingerprints, and investigators have developed techniques to extract extraordinary intelligence from timing data alone.

Geographic Time Zone Contradictions: Researchers tracking international cybercriminal networks identified coordination patterns across supposedly unrelated accounts. Five accounts claiming to operate from different U.S. cities all showed posting patterns consistent with Central European Time, despite using location-appropriate slang and cultural references. Further analysis revealed they were posting during European business hours while American accounts typically show evening and weekend activity.
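One rough way to test a time zone claim is to ask which UTC offset would place most of an account's posts inside ordinary business hours. The sketch below assumes you have collected timezone-aware post timestamps; the 09:00 to 17:00 window, the sample data, and the `best_business_hours_offset` helper are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime, timezone


def best_business_hours_offset(timestamps, start_hour=9, end_hour=17):
    """Return (utc_offset_hours, share) for the offset that puts the largest
    share of posts inside local business hours. Timestamps must be tz-aware."""
    hours = Counter(ts.astimezone(timezone.utc).hour for ts in timestamps)
    total = sum(hours.values())
    if total == 0:
        raise ValueError("no timestamps supplied")
    best = None
    for offset in range(-12, 15):  # whole-hour offsets from UTC-12 to UTC+14
        in_window = sum(count for hour, count in hours.items()
                        if start_hour <= (hour + offset) % 24 < end_hour)
        share = in_window / total
        if best is None or share > best[1]:
            best = (offset, share)
    return best


# Hypothetical account posting 08:00-15:00 UTC on five weekdays.
posts = [datetime(2024, 3, day, hour, 0, tzinfo=timezone.utc)
         for day in range(4, 9) for hour in range(8, 16)]
print(best_business_hours_offset(posts))
```

An offset is not a location: several countries share each offset, daylight saving time shifts it twice a year, and night-shift workers or scheduled posts will skew the result. Use it to flag contradictions with a claimed location, not to assert where someone is.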

Automation Detection Through Micro-Timing: A social media manipulation investigation used precise timestamp analysis to identify bot behavior. Suspected accounts were posting with unusual regularity—exactly every 3 hours and 17 minutes for weeks. Human posting shows natural variation, but these accounts demonstrated algorithmic precision that revealed automated management despite otherwise convincing content.
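The regularity described here can be measured with the coefficient of variation (standard deviation divided by mean) of the gaps between consecutive posts: a value near zero means machine-like spacing. This is a minimal sketch with hypothetical sample data; any cut-off you choose for "too regular" is your own assumption.

```python
from datetime import datetime, timedelta
from statistics import mean, pstdev


def interval_regularity(timestamps):
    """Return (mean_gap_seconds, coefficient_of_variation) for consecutive posts."""
    ordered = sorted(timestamps)
    gaps = [(later - earlier).total_seconds()
            for earlier, later in zip(ordered, ordered[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three timestamps")
    average = mean(gaps)
    variation = pstdev(gaps) / average if average else 0.0
    return average, variation


# Hypothetical account posting exactly every 3 hours and 17 minutes.
start = datetime(2024, 1, 1)
scheduled = [start + i * timedelta(hours=3, minutes=17) for i in range(50)]
print(interval_regularity(scheduled))

# Hypothetical human-like account with uneven gaps (1 h, 5 h, 30 min).
manual = [start, start + timedelta(hours=1), start + timedelta(hours=6),
          start + timedelta(hours=6, minutes=30)]
print(interval_regularity(manual))
```

Scheduling tools used by legitimate accounts also produce regular gaps, so low variation indicates automation, not necessarily malicious intent.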

Network Archaeology and Relationship Intelligence

Twitter’s social graph remains one of its most valuable intelligence sources, requiring investigators to become expert relationship analysts.

The Early Follower Principle: When investigating anonymous accounts involved in political manipulation, researchers focus on the first 50 followers. These early connections often reveal real identities or organizational affiliations before operators realize they need operational security. In one case, an anonymous political attack account’s early followers included three employees from the same PR firm, revealing the operation’s true source.

Mutual Connection Pattern Analysis: Intelligence analysts investigating foreign interference discovered sophisticated relationship mapping. Suspected accounts showed carefully constructed following patterns—they followed legitimate journalists, activists, and political figures to appear authentic, but also maintained subtle connections to each other through shared follows of obscure accounts that served as coordination signals.
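If you have recorded who each suspect account follows, you can surface shared obscure follows by inverting that mapping. The sketch below assumes manually collected following lists and follower counts; the handles, the 500-follower threshold for "obscure", and the `shared_obscure_follows` helper are all hypothetical.

```python
from collections import defaultdict


def shared_obscure_follows(following, follower_counts,
                           max_followers=500, min_suspects=3):
    """Find low-follower accounts that several suspect accounts all follow.

    `following` maps each suspect handle to the set of handles it follows.
    `follower_counts` maps a followed handle to its follower count.
    """
    followed_by = defaultdict(set)
    for suspect, targets in following.items():
        for target in targets:
            followed_by[target].add(suspect)
    return {
        target: sorted(suspects)
        for target, suspects in followed_by.items()
        # Skip targets with unknown follower counts instead of guessing.
        if len(suspects) >= min_suspects
        and target in follower_counts
        and follower_counts[target] <= max_followers
    }


# Hypothetical sample data for illustration only.
following = {
    "s1": {"big_news", "hub_x", "journo"},
    "s2": {"big_news", "hub_x"},
    "s3": {"hub_x", "activist"},
}
follower_counts = {"big_news": 2_000_000, "hub_x": 41,
                   "journo": 85_000, "activist": 12_000}
print(shared_obscure_follows(following, follower_counts))
```

Filtering out large accounts matters because almost everyone follows major news outlets, so only overlap on small, unremarkable accounts carries signal.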

Reply Chain Forensics: A financial fraud investigation revealed coordination through reply pattern analysis. Seven accounts engaged in artificial conversation chains to boost specific investment content. While the conversations appeared natural, timing analysis showed responses occurred within 30-45 seconds consistently—far faster than natural reading and response times for the complex financial content being discussed.
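Reply timing like this is straightforward to quantify once you have the timestamp of each post and of the reply to it. The following sketch uses hypothetical timestamps; the helper names are illustrative, and the 30 to 45 second band is taken from the case described above.

```python
from datetime import datetime, timedelta
from statistics import median


def reply_latencies(pairs):
    """Seconds between each post and its reply, given (posted_at, replied_at) pairs."""
    return [(replied - posted).total_seconds() for posted, replied in pairs]


def share_within(latencies, low=30, high=45):
    """Fraction of replies that arrived between `low` and `high` seconds."""
    return sum(low <= seconds <= high for seconds in latencies) / len(latencies)


# Hypothetical conversation chain: each reply lands 32-44 seconds after the post.
base = datetime(2024, 5, 6, 14, 0, 0)
delays = [32, 41, 38, 44, 35]
pairs = [(base + timedelta(minutes=5 * i),
          base + timedelta(minutes=5 * i, seconds=delay))
         for i, delay in enumerate(delays)]

latencies = reply_latencies(pairs)
print(median(latencies), share_within(latencies))
```

Compare the result against how long the content would plausibly take to read; a tight band of fast replies to long, technical posts is the pattern worth documenting.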

Systematic Documentation and Intelligence Development

The most successful profile analysis investigations employ systematic documentation techniques that build comprehensive intelligence over time rather than relying on single-point assessments.

Behavioral Baseline Establishment: Investigators spend 2-4 weeks establishing normal behavioral patterns before conducting anomaly analysis. This baseline includes posting frequency, engagement patterns, topic preferences, language usage, and network interaction patterns. Deviations from the established baseline may indicate significant developments and are worth a closer look.
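For a numeric signal such as daily post count, a simple way to express "deviation from baseline" is a z-score: how many standard deviations an observation sits from the baseline mean. The sketch below uses hypothetical counts, and the `baseline_deviation` helper is illustrative; it is one possible measure, not a prescribed method.

```python
from statistics import mean, stdev


def baseline_deviation(baseline_counts, observed):
    """Z-score of an observed daily post count against the baseline period."""
    mu = mean(baseline_counts)
    sigma = stdev(baseline_counts)
    if sigma == 0:
        # A perfectly flat baseline: any difference is infinitely unusual.
        difference = observed - mu
        if difference == 0:
            return 0.0
        return float("inf") if difference > 0 else float("-inf")
    return (observed - mu) / sigma


# Hypothetical two-week baseline of posts per day.
baseline = [4, 6, 5, 7, 5, 4, 6, 5, 6, 4, 5, 7, 5, 6]
print(round(baseline_deviation(baseline, 5), 2))   # an ordinary day
print(round(baseline_deviation(baseline, 19), 2))  # a sudden burst of activity
```

The same function works for any daily metric you track, such as replies sent or links shared, as long as the baseline period is long enough to capture weekday and weekend differences.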

Multi-Vector Correlation Analysis: Advanced investigations combine temporal, linguistic, network, and content analysis to build confidence in their conclusions. A single indicator only suggests a possibility, but convergent evidence from multiple analysis vectors can support actionable conclusions at confidence levels above 85%.
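How individual indicators are combined into a single confidence figure is left open here. One simple illustration is a noisy-OR combination, which assumes each indicator is an independent estimate of the same hypothesis; that independence assumption rarely holds fully in practice, and the per-indicator probabilities below are hypothetical.

```python
from math import prod


def combined_confidence(indicator_probs):
    """Noisy-OR: probability that at least one indicator is correct,
    assuming the indicators are independent of each other."""
    return 1 - prod(1 - p for p in indicator_probs)


# Hypothetical analyst estimates for each analysis vector.
indicators = {"temporal": 0.5, "linguistic": 0.4, "network": 0.6}
print(round(combined_confidence(indicators.values()), 2))
```

No single estimate here exceeds 0.6, yet together they clear the 85% mark, which is the point of convergent evidence. If two indicators are really measuring the same thing, this method will overstate your confidence.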

Predictive Behavior Modeling: The most sophisticated investigators use historical pattern analysis to predict likely future behaviors and optimal monitoring strategies. Understanding individual behavioral patterns enables investigators to anticipate when targets are most likely to post valuable intelligence or engage in significant activities.

Summary

Modern Twitter OSINT now requires investigators to develop cross-platform correlation skills and collaborative intelligence gathering approaches. While the technical barriers have increased significantly, the platform remains valuable for those who understand how to leverage remaining accessible features through creative, systematic investigation techniques.

To improve your OSINT skills, check out our OSINT Investigator Bundle. You’ll explore both fundamental and advanced techniques and receive an OSINT Certified Investigator Voucher.