Navigating cybersecurity challenges in the early days of Agentic AI

As we continue to evolve the field of AI, a new branch that has been accelerating recently is Agentic AI. Multiple definitions are circulating, but essentially, Agentic AI involves one or more AI systems working together to accomplish a task using tools in an unsupervised fashion. A basic example of this is tasking an AI Agent with finding entertainment events I could attend during summer and emailing the options to my family.

Agentic AI requires a few building blocks, and while there are many variants and technical opinions on how to build, the basic implementation typically includes a Reasoning LLM (Large Language Model) – like the ones behind ChatGPT, Claude, or Gemini – that can invoke tools, such as an application or function to perform a task and return results. A tool can be as simple as a function that returns the weather, or as complex as a browser commanding tool that can navigate through websites.

While this technology has a lot of potential to augment human productivity, it also comes with a set of challenges, many of which haven’t been fully considered by the technologists working on such systems. In the cybersecurity industry, one of the core principles we all live by is implementing “security by design”, instead of security being an afterthought. It is under this principle that we explore the security implications (and threats) around Agentic AI, with the goal of bringing awareness to both consumers and creators:

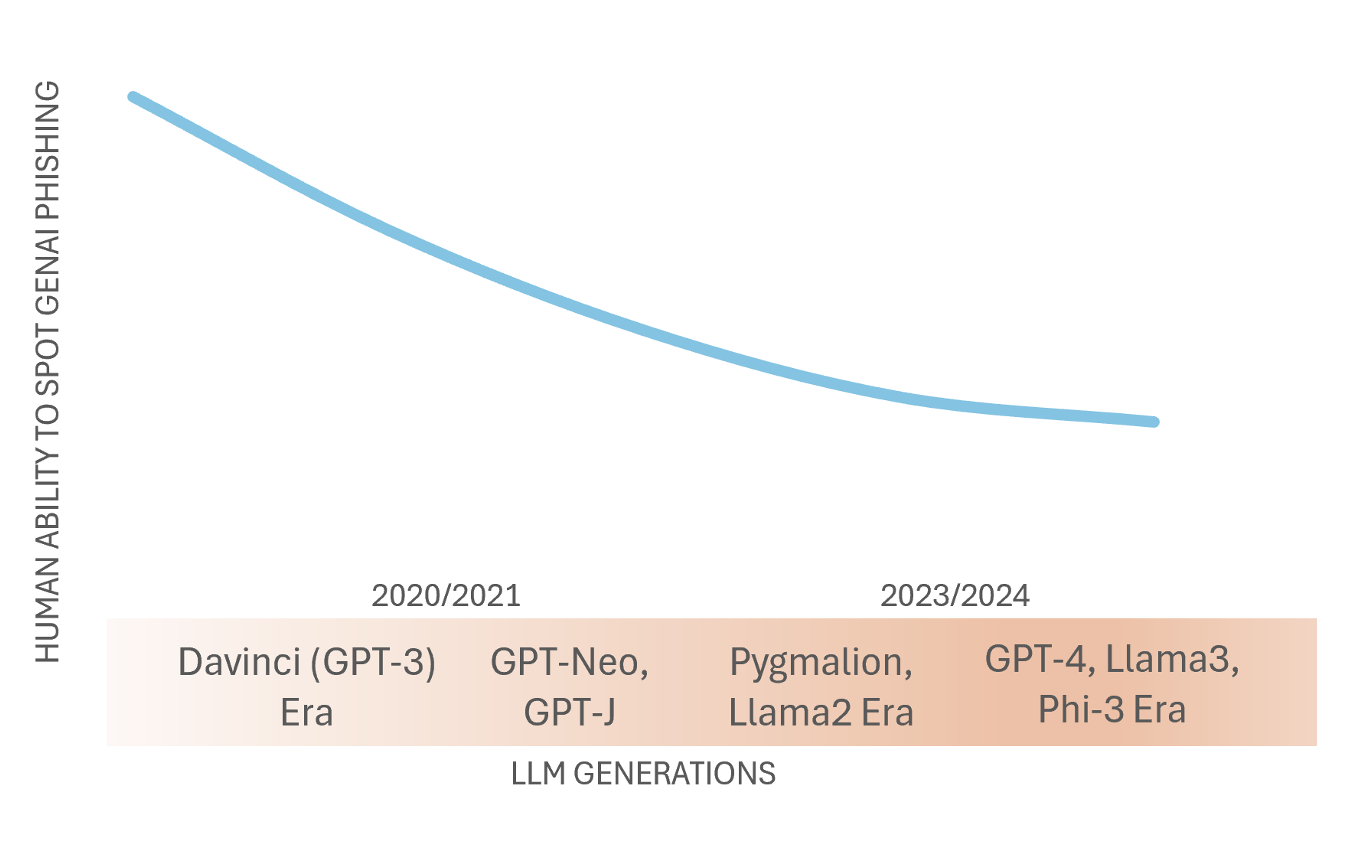

- As of today, Agentic AI has to meet a high bar to be fully adopted in our daily lives. Think about the precision required for billing or healthcare related tasks, or the level of trust customers would need to have to delegate sensitive tasks that could have financial or legal consequences. However, bad actors do not play by the same rules and do not require any “high bar” to leverage this technology to compromise victims. For example, a bad actor using Agentic AI to automate the process of researching (social engineering) and targeting victims with phishing emails is satisfied with an imperfect system that is only reliable 60% of the time, because that’s still better than attempting to manually do it, and the consequences associated with “AI errors” in this scenario are minimum for cybercriminals. In another recent example, Claude AI was exploited to orchestrate a campaign that created and managed fake personas (bots) on social media platforms, automatically interacting with carefully selected users to manipulate political narratives. Consequently, one of the threats that is likely to be fueled by malicious AI Agents is scams, regardless of these being delivered by text, email or deepfake video. As seen in recent news, crafting a convincing deepfake video, writing a phishing email or leveraging the latest trend to scam people with fake toll texts is, for bad actors, easier than ever thanks to a plethora of AI offerings and advancements. In this regard, AI Agents have the potential to continue increasing the ROI (Return on Investment) for cybercriminals, by automating aspects of the scam campaign that have been manual so far, such as tailoring messages to target individuals or creating more convincing content at scale.

- Agentic AI can be abused or exploited by cybercriminals, even when the AI agent is in the hands of a legitimate user. Agentic AI can be quite vulnerable if there are injection points. For example, AI Agents can communicate and take actions by interacting in a standardized fashion using what is known as MCP (Model Context Protocol). The MCP acts as some sort of repository where a bad actor could host a tool with a dual purpose. For example, a threat actor can offer a tool/integration via MCP that on the surface helps an AI browse the web, but behind the scenes, it exfiltrates data/arguments given by the AI. Or by the same token, an Agentic AI reading let’s say emails to summarize them for you could be compromised by a carefully crafted “malicious email” (known as indirect prompt injection) sent by the cybercriminal to redirect the thought process of such AI, deviating it from the original task (summarizing emails) and going rogue to accomplish a task orchestrated by the bad actor, like stealing financial information from your emails.

- Agentic AI also introduces vulnerabilities through inherently large chances of error. For instance, an AI agent tasked with finding a good deal for buying marketing data could end up in a rabbit hole buying illegal data from a breached database on the dark web, even though the legitimate user never intended to. While this is not triggered by a bad actor, it is still dangerous given the large number of possibilities on how an AI Agent can behave, or derail, given a poor choice of task description.

With the proliferation of Agentic AI, we will see both opportunities to make our life better as well as new threats from bad actors exploiting the same technology for their gain, by either intercepting and poisoning legitimate users AI Agents, or using Agentic AI to perpetuate attacks. With this in mind, it’s more important than ever to remain vigilant, exercise caution and leverage comprehensive cybersecurity solutions to live safely in our digital world.

The post Navigating cybersecurity challenges in the early days of Agentic AI appeared first on McAfee Blog.