Three steps to build a data foundation for federal AI innovation

America’s AI Action Plan outlines a comprehensive strategy for the country’s leadership in AI. The plan seeks, in part, to accelerate AI adoption in the federal government. However, there is a gap in that vision: agencies have been slow to adopt AI tools to better serve the public. The biggest barrier to adopting and scaling trustworthy AI isn’t policy or compute power — it’s the foundation beneath the surface. How agencies store, access and govern their records will determine whether AI succeeds or stalls. Those records aren’t just for retention purposes; they are the fuel AI models need to power operational efficiencies through streamlined workflows and uncover mission insights that enable timely, accurate decisions. Without robust digitalization and data governance, federal records cannot serve as the reliable fuel AI models need to drive innovation.

Before AI adoption can take hold, agencies must do something far less glamorous but absolutely essential: modernize their records. Many still need to automate records management, beginning with opening archival boxes, assessing what is inside, and deciding what is worth keeping. This essential process transforms inaccessible, unstructured records into structured, connected datasets that AI models can actually use. Without it, agencies are not just delaying AI adoption; they’re building on a poor foundation that will collapse under the weight of daily mission demands.

If you do not know the contents of the box, how confident can you be that the records aren’t crucial to automating a process with AI? In AI terms, if you enlist the help of a model, such as one from OpenAI, the results will only be as good as the digitized data behind it. The greater the knowledge base, the faster AI can be adopted and scaled to positively impact public service. Here is where agencies can start preparing their records (their knowledge base) to lay a defensible foundation for AI adoption.

Step 1: Inventory and prioritize what you already have

Many agencies are sitting on decades’ worth of records, housed in a mix of storage boxes, shared drives, aging databases, and under-governed digital repositories. These records often lack consistent metadata, classification tags or digital traceability, making them difficult to find, harder to govern, and nearly impossible to automate.

This fragmentation is not new. According to NARA’s 2023 FEREM report, only 61% of agencies were rated as low-risk in their management of electronic records — indicating that many still face gaps in easily accessible records, digitalization and data governance. This leaves thousands of unstructured repositories vulnerable to security risks and unable to be fed into an AI model. A comprehensive inventory allows agencies to see what they have, determine what is mission-critical, and prioritize records cleanup. Not everything needs to be digitalized. But everything needs to be accounted for. This early triage is what ensures digitalization, automation and analytics are focused on the right things, maximizing return while minimizing risk.
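The triage described above can be sketched in a few lines. This is a minimal illustration only, not an agency or NARA method: the `RecordSeries` fields, the sample series names, and the ordering rule (mission-critical series without metadata first) are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class RecordSeries:
    # All fields are hypothetical, chosen only to illustrate an inventory entry.
    name: str
    location: str           # e.g. "storage box", "shared drive"
    has_metadata: bool      # consistent metadata already present?
    mission_critical: bool  # tied to a mission-critical workflow?


def triage(inventory):
    """Return every series, ordered so mission-critical series that
    lack metadata come first. Nothing is dropped: everything stays
    accounted for even if it is never digitalized."""
    return sorted(
        inventory,
        key=lambda r: (not r.mission_critical, r.has_metadata),
    )


inventory = [
    RecordSeries("Archived correspondence", "storage box", False, False),
    RecordSeries("Benefits case files", "aging database", False, True),
    RecordSeries("Policy memos", "shared drive", True, True),
]

for series in triage(inventory):
    print(series.name)
```

With the sample data, the benefits case files sort to the top because they are mission-critical and still lack metadata, while the non-critical correspondence stays in the inventory but at the bottom of the queue.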

Without this step, agencies risk building powerful AI models on unreliable data, a setup that undermines outcomes and invites compliance pitfalls.

Step 2: Make digitalization the bedrock of modernization

One of the biggest misconceptions around modernization is that digitalization is a tactical compliance task with limited strategic value. In reality, digitalization is what turns idle content into usable data. It’s the on-ramp to AI-driven automation across the agency, including one-click records management and data-driven policymaking.

By focusing on high-impact records — those that intersect with mission-critical workflows, the Freedom of Information Act, cybersecurity enforcement or policy enforcement — agencies can start to build a foundation that’s not just compliant, but future-ready. These records form the connective tissue between systems, workforce, data and decisions.

The Government Accountability Office estimates that up to 80% of federal IT budgets are still spent maintaining legacy systems, resources that, if reallocated, could help fund strategic digitalization and unlock real efficiency gains. The opportunity cost of delay is increasing exponentially every day.

Step 3: Align records governance with AI strategy

Modern AI adoption isn’t just about models and computation; it’s about trust, traceability, and compliance. That’s why strong information governance is essential.

Agencies moving fastest on AI are pairing records management modernization with evolving governance frameworks, synchronizing classification structures, retention schedules and access controls with broader digital strategies. The Office of Management and Budget’s 2025 AI Risk Management guidance is clear: explainability, reliability and auditability must be built in from the start.

When AI deployment evolves in step with a diligent records management program centered on data governance, agencies are better positioned to accelerate innovation, build public trust, and avoid costly rework. For example, labeling records with standardized metadata from the outset enables rapid, digital retrieval during audits or investigations, a need that’s only increasing as AI use expands. This alignment is critical as agencies adopt FedRAMP Moderate-certified platforms to run sensitive workloads and meet compliance requirements. These platforms raise the baseline for performance and security, but they only matter if the data moving through them is usable, well-governed and reliable.
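As a rough illustration of why standardized metadata speeds up retrieval, the sketch below builds a lookup index over record labels. The field names (`classification`, `retention_schedule`) and the sample values are hypothetical and are not drawn from any federal schema.

```python
from collections import defaultdict

# Hypothetical records, each labeled with the same metadata fields at creation.
records = [
    {"id": "R-001", "classification": "FOIA", "retention_schedule": "7y"},
    {"id": "R-002", "classification": "Personnel", "retention_schedule": "30y"},
    {"id": "R-003", "classification": "FOIA", "retention_schedule": "7y"},
]


def build_index(records, field):
    """Group record ids by the value of one metadata field."""
    index = defaultdict(list)
    for rec in records:
        index[rec[field]].append(rec["id"])
    return dict(index)


by_classification = build_index(records, "classification")

# During an audit, all FOIA-related records come back in one lookup.
print(by_classification.get("FOIA", []))
```

The lookup only works because every record carries the same field with consistent values; records labeled inconsistently, or not at all, would simply be missing from the index.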

Infrastructure integrity: The hidden foundation of AI

Strengthening the digital backbone is only half of the modernization equation. Agencies must also ensure the physical infrastructure supporting their systems can withstand growing operational, environmental, and cybersecurity demands.

Colocation data centers play a critical role in this continuity — offering secure, federally compliant environments that safeguard sensitive data and maintain uptime for mission-critical systems. These facilities provide the stability, scalability and redundancy needed to sustain AI-driven workloads, bridging the gap between digital transformation and operational resilience.

By pairing strong information governance with resilient colocation infrastructure, agencies can create a true foundation for AI, one that ensures innovation isn’t just possible, but sustainable in even the most complex mission environments.

Melissa Carson is general manager for Iron Mountain Government Solutions.

The post Three steps to build a data foundation for federal AI innovation first appeared on Federal News Network.

© Getty Images/iStockphoto/FlashMovie

Twilio Drives CX with Trust, Simple, and Smart

Summary Bullets:

- The combination of omni-channel capability, effective data management, and AI will drive better customer experience.

- As Twilio’s business evolves from CPaaS to customer experience, the company focuses its product development on themes around trust, simple, and smart.

The ability to provide superior customer experience (CX) helps a business gain customer loyalty and a strong competitive advantage. Many enterprises are looking to AI, including generative AI (GenAI) and agentic AI, to further boost CX by enabling faster resolution and personalized experiences.

Communications platform-as-a-service (CPaaS) vendors offer a platform that focuses on meeting omni-channel communications requirements. These players have now integrated a broader set of capabilities to solve CX challenges, involving different touch points including sales, marketing, and customer service. Twilio is one of the major CPaaS vendors that has moved beyond just communications application programming interfaces (APIs), adding contact center (Twilio Flex), customer data management (Segment), and conversational AI. Twilio’s product development has been focusing on three key themes: Trusted, Simple, and Smart. The company has demonstrated these themes through product announcements throughout 2025 and showcased them at its SIGNAL events around the world.

Firstly, Twilio is winning customer trust through its scalable and reliable platform (e.g., 99.99% API reliability) and by working with all major telecom operators in each market (e.g., Optus, Telstra, and Vodafone in Australia). More importantly, it is helping clients win the trust of their customers. With rising fraud impacting consumers, Twilio has introduced various capabilities, including Silent Network Authentication and FIDO-certified passkeys, as part of Verify, its user verification product. The company is also promoting the use of branded communications, which have been shown to build consumer trust and a greater willingness to engage with brands. Twilio has introduced branded calling, RCS for branded messaging, WhatsApp Business Calling, and WebRTC for browser.
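To put the 99.99% figure in perspective, the sketch below converts a reliability percentage into a yearly downtime budget. It assumes the figure can be read as availability over a 365-day year, which the article does not state; the function name is made up for the example.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability_pct):
    """Minutes per year a service may be unavailable at a given
    availability percentage (assumes a 365-day year)."""
    return (100 - availability_pct) / 100 * MINUTES_PER_YEAR


# 99.99% leaves a budget of roughly 52.6 minutes per year.
print(round(downtime_minutes_per_year(99.99), 2))
```

Under that reading, "four nines" allows less than an hour of cumulative unavailability across a whole year.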

The second theme is about simplifying developer experience when using the Twilio platform to achieve better CX outcomes. Twilio has long been in the business of giving businesses the ability to reach their customers through a range of communications channels. With Segment (customer data platform), Twilio enables businesses to leverage their data more effectively for gaining customer insights and taking actions. An example is the recent introduction of Event Triggered Journey (general availability in July 2025), which allows the creation of automated marketing workflows to support personalized customer journeys. This can be used to enable a responsive approach for real-time use cases, such as cart abandonment, onboarding flows, and trial-to-paid account journeys. Taking action promptly to address issues a customer is facing can improve the chance of a successful transaction and a happy customer.

The third theme on ‘smart’ is about leveraging AI to make better decisions, enable differentiated experiences, and build stronger customer relationships. Twilio announced two conversational AI updates in May 2025. The first is ‘Conversational Intelligence’ (generally available for voice and in private beta for messaging), which analyzes voice calls and text-based conversations and converts them into structured data and insights. This is useful for understanding sentiments, spotting compliance risks, and identifying churn risks. The other AI capability is ‘ConversationRelay’, which enables developers to create voice AI agents using their preferred LLM and integrate with customer data. Twilio is leveraging speech recognition technology and interrupt handling to enable human-like voice agents. Cedar, a financial experience platform for healthcare providers, is leveraging ConversationRelay to automate inbound patient billing calls. Healthcare providers receive a large volume of calls from patients seeking clarity on their financial obligations, and the use of ConversationRelay enables AI-powered voice agents to provide quick answers and reduce wait times. This provides a better patient experience and a more quantifiable outcome compared to traditional chatbots. It is also said to reduce costs. The real test is whether such capabilities impact customer experience metrics, such as net promoter score (NPS).

Today, many businesses use Twilio to enhance customer engagement. At the Twilio SIGNAL Sydney event, for example, Twilio customers spoke about their success with Twilio solutions. Crypto.com reduced onboarding times from hours to minutes, Lendi Group (a mortgage FinTech company) highlighted the use of AI agents to engage customers after hours, and Philippine Airlines was exploring Twilio Segment and Twilio Flex to enable personalized customer experiences. There was general excitement about the use of AI to further enhance CX. However, while businesses are aware of the benefits of using AI to improve customer experience, the challenge has been the ability to do it effectively.

Twilio is simplifying the process with Segment and conversational AI solutions. The company is also tackling another major challenge, AI security, through its acquisition (completed on November 14, 2025) of Stytch, an identity platform for AI agents. AI agent authentication becomes crucial as more agents are deployed and given access to data and systems. AI agents will also collaborate autonomously through protocols such as Model Context Protocol, which can create security risks without an effective identity framework.

The industry has come a long way from legacy chatbots to GenAI-powered voice agents, and Twilio is not alone in pursuing AI-powered CX solutions. The market is still a long way off from providing quantifiable feedback from customers. Technology vendors enabling customer engagement (e.g., Genesys, Salesforce, and Zendesk) have developed AI capabilities including voice AI agents. The collective efforts and competition within the industry will help to drive awareness and adoption. But it is crucial to get the basics right around data management, security, and the cost of deploying AI.

XRP On-Chain Velocity Hits Yearly High As Network Activity Explodes

XRP has reclaimed the $2.10 level after a strong rebound across the broader crypto market, signaling renewed confidence following several days of fear, volatility, and sharp pullbacks. Analysts now see the potential for a sustained recovery as momentum returns and buyers show signs of stepping back in. The reclaim of this key level comes at a crucial moment, with traders closely watching whether XRP can build enough strength to challenge higher resistances in the coming sessions.

Adding to the renewed optimism, a new report from CryptoOnchain on CryptoQuant highlights a major spike in XRP Ledger Velocity, marking one of the strongest on-chain signals of 2025. On December 2, the Velocity metric surged to 0.0324, its highest value of the year. Velocity measures how frequently XRP moves across the network, serving as a direct indicator of economic activity, liquidity, and transactional demand.

Such a dramatic rise in Velocity shows that XRP is circulating rapidly among users rather than sitting dormant in wallets. It reflects increased participation from traders, active holders, and possibly even whales, pointing toward heightened engagement on the network.

Network Activity Surges as Velocity Signals Peak 2025 Engagement

According to the CryptoOnchain report, the latest spike in XRP Ledger Velocity indicates a dramatic shift in how XRP is being used across the network. Instead of sitting idle in cold wallets or being held for long-term storage, XRP is rapidly changing hands among market participants. This level of circulation suggests that traders, active users, and possibly whales are driving significantly higher transaction volume than usual.

CryptoOnchain explains that such a strong jump in Velocity typically signals high liquidity and deep participation across the ecosystem. When coins move this quickly, it means the asset is being used in real economic activity—whether for trading, transfers, arbitrage, or strategic repositioning by large holders. This type of behavior often aligns with periods of heightened volatility, increased speculation, or structural shifts in market sentiment.

Regardless of whether price trends upward or downward, the data confirms that the XRP Ledger is entering one of its most active phases of 2025. User engagement has reached a yearly peak, with more participants interacting with the network and more coins circulating than at any point this year.

Such elevated activity often precedes or accompanies major market movements, reinforcing the idea that XRP is transitioning into a more dynamic and liquid phase as the recovery unfolds.

XRP Faces Heavy Resistance in a Weakening Daily Structure

XRP’s daily chart shows an attempted rebound toward the $2.15–$2.20 range, but the broader structure remains pressured by a persistent downtrend. After the sharp sell-off in late October and November—which pushed XRP below the $2.00 level for the first time in months—the asset is now trying to stabilize. The recent bounce reflects short-term buying interest, yet the price still trades below all major moving averages, signaling that bulls have not fully regained control.

The 50-day SMA is currently sloping downward near $2.35, acting as immediate resistance. The 100-day SMA around $2.55 and the 200-day SMA near $2.60 form a stacked barrier above price, confirming a structurally bearish setup. For XRP to build meaningful upside momentum, it must reclaim at least the 50-day SMA and flip it into support—something it has failed to do since late September.
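For readers unfamiliar with the indicator, a simple moving average (SMA) is just the mean of the most recent closing prices. The sketch below shows the calculation and a check for the "stacked" arrangement described above. The helper names are made up for the example, and the inputs are the approximate levels quoted in the article, not live market data.

```python
def sma(closes, window):
    """Simple moving average of the last `window` closing prices."""
    if len(closes) < window:
        raise ValueError("not enough closes for this window")
    return sum(closes[-window:]) / window


def is_bearish_stack(price, sma50, sma100, sma200):
    """True when price sits below the 50-day SMA, which in turn sits
    below the 100-day and 200-day SMAs."""
    return price < sma50 < sma100 < sma200


# Approximate levels cited in the article.
print(is_bearish_stack(price=2.15, sma50=2.35, sma100=2.55, sma200=2.60))

# The averaging itself, on a toy series of closes.
print(sma([2.0, 2.1, 2.2, 2.3], window=2))
```

Reclaiming the 50-day SMA, in these terms, means the price argument rising above `sma50`, at which point the check no longer holds.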

Support remains stable around $2.00–$2.05, where buyers have defended the level repeatedly with long lower wicks. A breakdown below this area could expose XRP to deeper losses toward $1.80. Meanwhile, volume remains muted, suggesting the rebound lacks strong conviction.

Featured image from ChatGPT, chart from TradingView.com

AT&T Extends Deadline for Data Breach Settlement Claims

The deadline for 51 million affected customers to claim compensation from two massive data leaks is now Dec. 18.

The post AT&T Extends Deadline for Data Breach Settlement Claims appeared first on TechRepublic.

TikTok to Invest $37B+ Into Brazil Data Center

The announcement highlights China’s broader ambitions in South America at a time of ongoing geopolitical and technological tensions with the US.

The post TikTok to Invest $37B+ Into Brazil Data Center appeared first on TechRepublic.

700Credit Reveals Data Breach

Michigan-based firm says a “bad actor” gained unauthorized access to certain PII housed within the 700Dealer.com application layer.

The post 700Credit Reveals Data Breach appeared first on TechRepublic.

Germany Tests Apple’s Privacy Fixes in Critical Market Test

Companies found guilty of breaching Germany's antitrust rules face fines up to 10% of annual turnover.

The post Germany Tests Apple’s Privacy Fixes in Critical Market Test appeared first on TechRepublic.

Expert Edition: How to modernize data for mission impact

Federal tech leaders are turning data into mission power.

Deliver faster. Operate smarter. Spend less. That’s the challenge echoing across federal C suites, and data modernization is central to the answer.

In our latest Federal News Network Expert Edition, leaders from across government and industry share how agencies are transforming legacy systems into mission-ready data engines:

- Alyssa Hundrup, health care director at the Government Accountability Office, urges DoD and VA to go beyond “just having agreements” to share health care services and start measuring the impact of these more than 180 agreements: “There’s more … that could really take a data-informed approach.”

- Duncan McCaskill, vice president of data at Maximus, reminds us that governance is everything: “Governance is your policy wrapper. … Data management is the execution of those rules every day. If you give AI terrible data, you’re going to get terrible results.”

- Stuart Wagner, chief data and AI officer at the Navy, calls out the risks of inconsistent classification: “If the line is unclear, they just go, ‘Well, we can’t share.’ ”

- Vice Adm. Karl Thomas, deputy chief of Naval operations for information warfare, highlights the power of AI and open architectures: “Let machines do what machines do best … so humans can make the decisions they need.”

- And from the Office of Personnel Management, a full overhaul of FedScope is underway to make federal workforce data more transparent and actionable.

In every case: Data is the mission driver.

Download the full ebook to explore how these agencies are modernizing their data strategies!

The post Expert Edition: How to modernize data for mission impact first appeared on Federal News Network.

© Federal News Network

Security’s Next Control Plane: The Rise of Pipeline-First Architecture

For years, security operations have relied on monolithic architectures built around centralized collectors, rigid forwarding chains, and a single “system of record” where all data must land before action can be taken. On paper, that design promised simplicity and control. In practice, it delivered brittle systems, runaway ingest costs, and teams stuck maintaining plumbing instead..

The post Security’s Next Control Plane: The Rise of Pipeline-First Architecture appeared first on Security Boulevard.

OpenAI desperate to avoid explaining why it deleted pirated book datasets

OpenAI may soon be forced to explain why it deleted a pair of controversial datasets composed of pirated books, and the stakes could not be higher.

At the heart of a class-action lawsuit from authors alleging that ChatGPT was illegally trained on their works, OpenAI’s decision to delete the datasets could end up being a deciding factor that gives the authors the win.

It’s undisputed that OpenAI deleted the datasets, known as “Books 1” and “Books 2,” prior to ChatGPT’s release in 2022. Created by former OpenAI employees in 2021, the datasets were built by scraping the open web and seizing the bulk of its data from a shadow library called Library Genesis (LibGen).

© wenmei Zhou | DigitalVision Vectors

E-Commerce Firm Coupang Faces Massive Fine After Data Breach

The South Korean company was hit by a data breach that exposed the personal information of 33.7 million users.

The post E-Commerce Firm Coupang Faces Massive Fine After Data Breach appeared first on TechRepublic.

XRP Hit By Violent 59% Leverage Flush As Speculators Slam The Brakes

XRP’s derivatives market has undergone a marked regime shift, with leverage collapsing and funding normalising in a way that signals a clear retreat from aggressive speculative positioning. The strongest evidence comes from Glassnode’s latest post on November 30, which frames the current phase as a structural, not merely tactical, pause in XRP leverage.

XRP Derivatives Unwind Accelerates

“XRP’s futures OI has fallen from 1.7B XRP in early October to 0.7B XRP (~59% flush-out). Paired with the funding rate dropping from ~0.01% to 0.001% (7D-SMA), 10/10 marked a structural pause in XRP speculators’ appetite to bet aggressively on upside,” Glassnode’s CryptoVizArt wrote on X.

Open interest at 1.7 billion XRP in early October reflected a heavily leveraged market, with large notional positions stacked in futures and perpetuals. The subsequent collapse to 0.7 billion XRP implies that around one billion XRP of derivatives exposure has been closed, liquidated, or otherwise unwound. Such a reduction is not just a marginal trimming of risk; it is a wholesale deleveraging that strips out a large part of the speculative layer sitting on top of the spot market.
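The ~59% figure follows directly from the two open-interest levels quoted by Glassnode. A quick check, using a helper name chosen for this example:

```python
def pct_drop(start, end):
    """Percentage decline from `start` to `end`."""
    return (start - end) / start * 100


oi_start_bn = 1.7  # billion XRP, early October
oi_end_bn = 0.7    # billion XRP, latest reading

# Roughly 1.0 billion XRP of exposure removed, a drop of about 58.8%.
print(round(oi_start_bn - oi_end_bn, 1))
print(round(pct_drop(oi_start_bn, oi_end_bn), 1))
```

The result rounds to the ~59% cited in the post.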

The funding-rate move is equally telling. A 7-day SMA around 0.01% had previously indicated a consistent long bias, with traders willing to pay a recurring fee to maintain leveraged upside exposure. The compression to roughly 0.001% pushes funding close to neutral. In perpetual futures, that transition typically occurs when demand for leveraged longs fades and the market no longer tolerates a meaningful premium to hold long positions.

Glassnode’s description of the October 10 crash as the point that “marked a structural pause” captures this shift in regime: the market moved from persistent long crowding to a far more cautious, balanced stance. The November 30 post sits on top of a broader context Glassnode has been documenting through November.

On November 8, the firm highlighted how profit taking has behaved during the recent drawdown: “Unlike previous profit realization waves that aligned with rallies, since late September, as XRP fell from $3.09 (~25%) to $2.30, profit realization volume (7D-SMA) surged by ~240%, from $65M/day to $220M/day. This divergence underscores distribution into weakness, not strength.” Rather than de-risking into strength, profitable holders have been realizing gains as price fell, reinforcing the deleveraging signalled by futures data.

On November 17, Glassnode turned to supply dynamics, noting that “the share of XRP supply in profit has fallen to 58.5%, the lowest since Nov 2024, when price was $0.53. Today, despite trading ~4× higher ($2.15), 41.5% of supply (~26.5B XRP) sits in loss — a clear sign of a top-heavy and structurally fragile market dominated by late buyers.” Those on-chain figures provide the background to the 30 November derivatives snapshot: a market whose ownership is skewed toward late entrants now sits on substantial unrealized losses, while the leverage that previously amplified upside has been largely flushed.
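The rounded figures in the November 8 and November 17 posts can be cross-checked with basic arithmetic. The sketch below uses only the numbers quoted above. The variable names are illustrative, and the implied total supply is a back-of-the-envelope derivation from the quoted share and amount, not a figure Glassnode reports.

```python
def pct_change(old, new):
    """Signed percentage change from `old` to `new`."""
    return (new - old) / old * 100


# November 8 post: price fall and profit-realization surge.
price_change = pct_change(3.09, 2.30)   # about -25.6%, quoted as ~25%
profit_change = pct_change(65, 220)     # about +238%, quoted as ~240%

# November 17 post: price multiple versus Nov 2024 and implied supply.
price_multiple = 2.15 / 0.53            # about 4.06, quoted as ~4x
implied_supply_bn = 26.5 / 0.415        # about 63.9 billion XRP

print(round(price_change, 1), round(profit_change, 1))
print(round(price_multiple, 2), round(implied_supply_bn, 1))
```

Each quoted approximation lands within rounding distance of the computed value, so the figures are internally consistent.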

Taken together, Glassnode’s data on futures open interest and funding rates crystallise the current state of XRP: a violent 59% leverage reset, a near-neutral funding regime, and a speculative cohort that has stepped back from paying for upside, all layered on top of a top-heavy holder base.

At press time, XRP traded at $2.04.

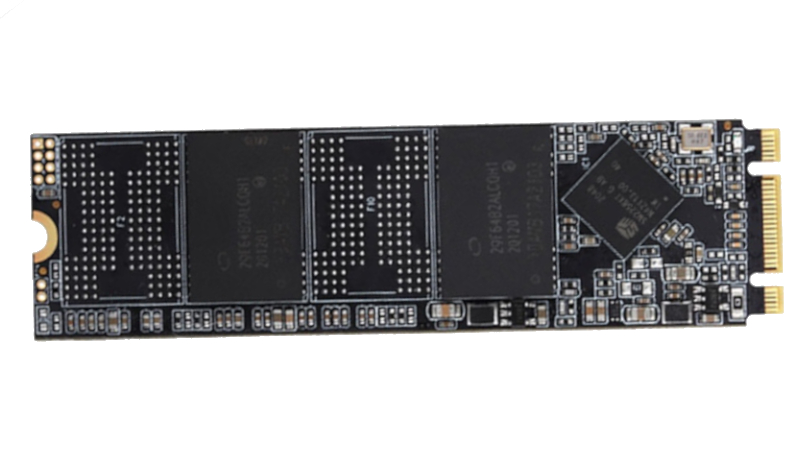

A Friendly Reminder That Your Unpowered SSDs Are Probably Losing Data

Save a bunch of files on a good ol’ magnetic hard drive, leave it in a box, and they’ll probably still be there a couple of decades later. The lubricants might have all solidified and the heads jammed in place, but if you can get things moving, you’ll still have your data. As explained over at [XDA Developers], though, SSDs can’t really offer the same longevity.

It all comes down to power. SSDs are considered non-volatile storage—in that they hold on to data even when power is removed. However, they can only do so for a rather limited amount of time. This is because of the way NAND flash storage works. It involves trapping a charge in a floating gate transistor to store a single bit of data. You can power down an SSD, and the trapped charge in all the NAND flash transistors will happily stay put. But over longer periods of time, from months to years, that charge can leak out. When this happens, data is lost.

Depending on your particular SSD, and the variety of NAND flash it uses (TLC, QLC, etc), the safe storage time may be anywhere from a few months to a few years. The process takes place faster at higher temperatures, too, so if you store your drives in a warm area, you could see surprisingly rapid loss.

Ultimately, it’s worth checking your drive specs and planning accordingly. Going on a two-week holiday? Your PC will probably be just fine switched off. Going to prison for three to five years with only a slim chance of parole? Maybe back up to a hard drive first, or have your cousin switch your machine on now and then for safety’s sake.

On a vaguely related note, we’ve even seen SSDs that can self-destruct on purpose. If you’ve got the lowdown on other neat solid-state stories, don’t hesitate to notify the tipsline.

Building a Low-Cost Satellite Tracker

Looking up at the sky just after sunset or just before sunrise will reveal a fairly staggering number of satellites orbiting overhead, from tiny cubesats to the International Space Station. Of course, these satellites are always around, and even though you’ll need specific conditions to view them with the naked eye, with the right radio antenna and only a few dollars in electronics you can see exactly which ones are flying by at any time.

[Josh] aka [Ham Radio Crash Course] is demonstrating this build on his channel and showing every step needed to get something like this working. The first part is finding the correct LoRa module, which will be the bulk of the cost of this project. Unlike those used for most Meshtastic nodes, this one needs to be built for the 433 MHz band. The software running on this module is from TinyGS, which we have featured here before, and which allows a quick and easy setup to listen in to these types of satellites. This build goes much further into detail on building the antenna, though, and also covers some other ancillary tasks like mounting it somewhere outdoors.

With all of that out of the way, though, the setup is able to track hundreds of satellites on very little hardware, as well as display information about each of them. We’d always favor a build that lets us gather data like this directly over using something like a satellite tracking app, although those do have their place. And of course, with slightly more compute and a more directed antenna there are all kinds of other data beaming down that we can listen in on as well, although that’s not always the intent.