Pufferfish Toxin Can Kill, Or It Can Relieve Pain

Tetrodotoxin (TTX) is best known as the neurotoxin of the pufferfish, though it also appears in a range of other marine species. You might remember it from an episode of The Simpsons involving a poorly prepared dish at a sushi restaurant. It is extremely potent: ingesting even a tiny amount can kill in short order.

Given its deadly reputation, it might be the last thing you’d expect to see used in a therapeutic context. And yet, tetrodotoxin is showing potential as a treatment for cancer-related pain. It’s a dangerous substance to work with, but it may hold promise where other pain relievers fall short.

Poison, or…?

Humans have known about the toxicity of the pufferfish and its eggs for thousands of years. Tetrodotoxin itself was isolated much later, by Dr. Yoshizumi Tahara in 1909.

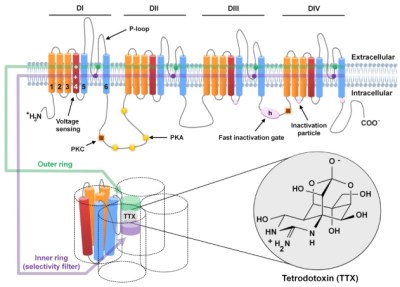

Its mechanism of action was demonstrated in 1964: tetrodotoxin binds to and blocks voltage-gated sodium channels in nerve cell membranes, preventing the nerves from conducting signals normally. The result is paralysis which, at a high enough dose, progresses to respiratory failure and death.

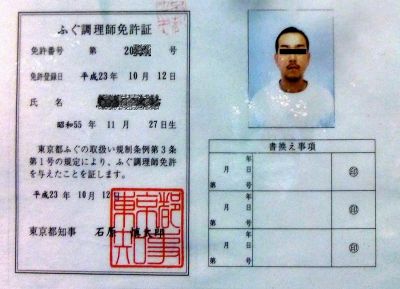

It doesn’t take a large dose of tetrodotoxin to kill, either: the median lethal dose in mice is a mere 334 μg per kilogram when ingested. The toxin’s lethality was historically a key reason Japan began specially licensing chefs who wished to prepare and serve pufferfish. Eating improperly prepared pufferfish can cause symptoms within 30 minutes, with death following within hours as the toxin leaves the victim unable to breathe. Notably, though, a patient who receives a sub-lethal dose, or who is given proper life support (particularly for the airway), can make a full recovery within days, with no lingering effects.

However, the very effects tetrodotoxin has on the nervous system are what may give it therapeutic value. By blocking sodium channels in the sensory neurons that carry pain signals, the toxin could act as a potent painkiller. Researchers have recently explored whether it could be particularly useful against neuropathic pain caused by cancer or by chemotherapy. This kind of pain is often hard to manage with conventional painkillers, and can persist even after recovery from cancer and after chemotherapy has ended.

The challenge of using a toxin for pain relief is obvious: there’s always a risk that its harmful effects will outweigh the intended therapeutic benefit. With tetrodotoxin, it all comes down to dosage. Patients in research studies have received doses on the order of 30 micrograms, far below the milligram-scale dose that would typically cause severe symptoms or death in an adult. The aim is to find a level at which tetrodotoxin relieves pain with minimal side effects, especially given that paralysis and respiratory failure are on the table.
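For a rough sense of the safety margin, suppose purely for illustration that the lethal adult dose were 1 mg (1000 μg). That figure is an assumption chosen to keep the arithmetic simple, not a clinical threshold; real toxicity varies with body weight, route of exposure, and individual health. A single 30 μg research dose would then be:

$$\frac{30\ \mu\text{g}}{1000\ \mu\text{g}} = 0.03 = 3\%$$

of that assumed lethal dose, or roughly one thirty-third of it. Assuming a higher lethal threshold would only widen the gap.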

A 2023 review of studies from around the world highlights that tetrodotoxin pain relief does come with some common adverse effects, even at tiny clinical doses. The most frequently reported were nausea, oral numbness, dizziness, and tingling. In many cases, these effects were mild and well tolerated. However, a small number of trial participants experienced more serious symptoms, such as loss of muscle control, pain, or hypertension. At the same time, the treatment did show positive results, with many patients reporting pain relief lasting days or even weeks after just a few days of tetrodotoxin injections.

While tetrodotoxin has been studied as a pain reliever for several decades now, it has yet to become a mainstream treatment. No study to date has involved more than 200 patients, and no research group or pharmaceutical company has pushed hard to bring a tetrodotoxin-based product to market. Research continues; a 2025 paper even explored ultra-low, nanogram-scale doses applied topically. For now, though, a commercial product remains a distant prospect, and the toxin is still the preserve of pufferfish and a range of other deadly species. Despite the promise it has shown so far, don’t expect to see it on a hospital ward any time soon.

Featured image: “Puffer Fish DSC01257.JPG” by Brocken Inaglory. Actually, not one of the poisonous ones, but it looked cool.