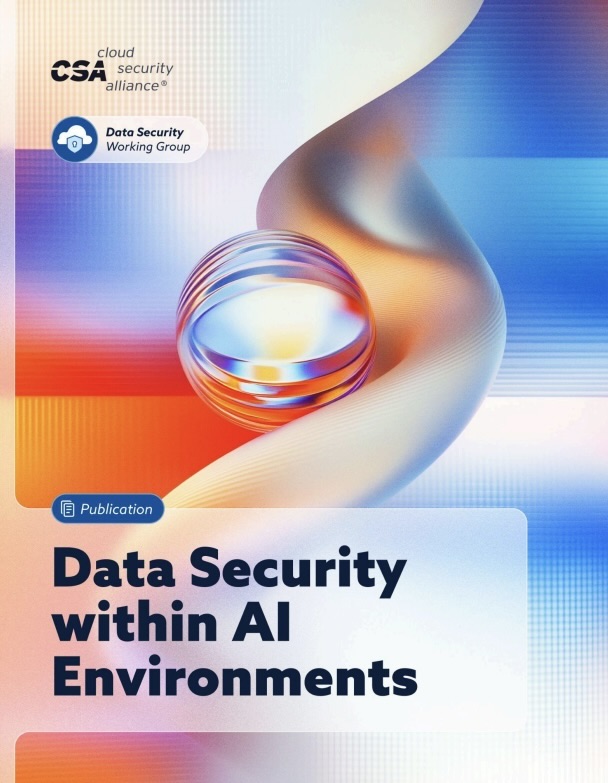

This Year in Scams: A 2025 Retrospective, and a Look Ahead at 2026

They came by phone, by text, by email, and they even weaseled their way into people’s love lives—an entire host of scams that we covered here in our blogs throughout the year.

Today, we look back, picking five noteworthy scams that firmly established new trends, along with one in particular that gives us a hint at the face of scams to come.

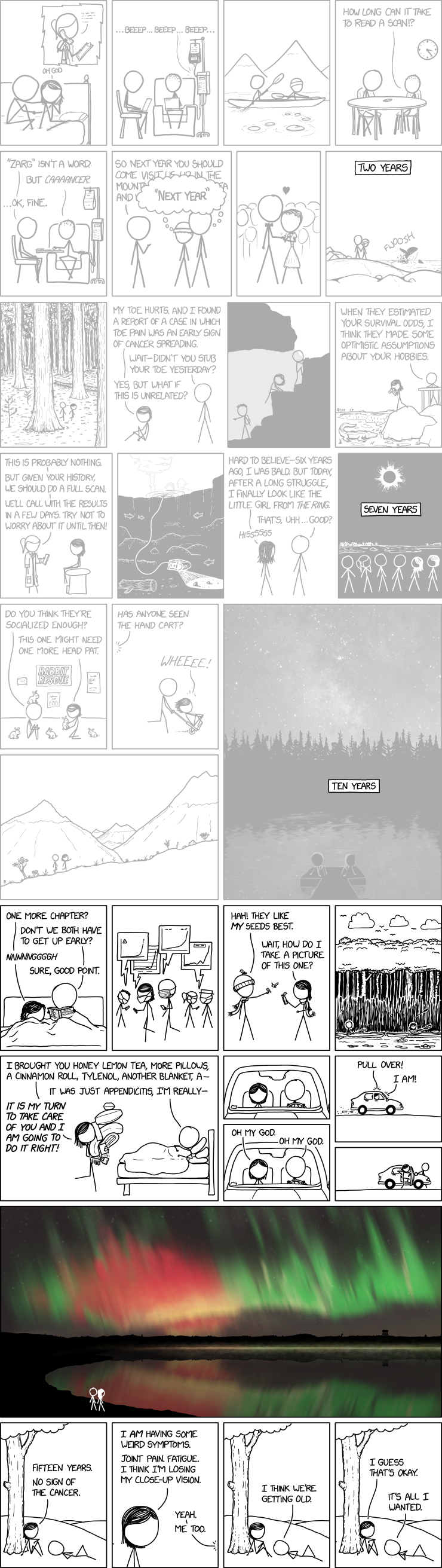

Let’s start it off with one scam that pinged plenty of phones over the spring and summer: those toll road texts.

1 – The Texts That Jammed Everyone’s Phones: The Toll Road Scam

It was the hot new scam of 2025 that increased by 900% in one year: the toll road scam.

There’s a good chance you got a few of these this year: scam texts that say you have an unpaid tab for tolls and that you need to pay right away. And as always, they come with a handy link where you can pay up and avoid that threat of a “late fee.”

Of course, links like those took people to phishing sites where people gave scammers their payment info, which led to fraudulent charges on their cards. In some instances, the scammers took it a step further by asking for driver’s license and Social Security numbers, key pieces of info for big-time identity theft.

No one knows what the hot new text scam of 2026 will be, but here are several ways you can stop text scams in their tracks, no matter what form they take:

How Can I Stop Text Scams?

Don’t click on any links in unexpected texts (or respond to them, either). Scammers want you to react quickly, but it’s best to stop and check it out.

Check to see if the text is legit. Reach out to the company that apparently contacted you using a phone number or website you know is real—not the info from the text.

Get our Scam Detector. It automatically detects scams by scanning URLs in your text messages. If you accidentally tap or click? Don’t worry, it blocks risky sites if you follow a suspicious link.
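For readers curious about what “checking a text” can look like in practice, here is a minimal Python sketch of the first two tips. It is an illustration only and not how Scam Detector works. The domain `tollagency.example`, the phrase list, and the function names are all hypothetical, chosen for this example.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: sites you already know are real (assumption for this sketch).
KNOWN_GOOD_DOMAINS = {"tollagency.example"}

# Hypothetical pressure phrases that often show up in scam texts.
URGENCY_PHRASES = ("late fee", "pay now", "immediately", "final notice")

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def extract_hosts(message):
    """Return the lowercase host name of every http(s) link in the message."""
    hosts = []
    for url in URL_PATTERN.findall(message):
        # Drop punctuation that trails the link in ordinary sentences.
        host = urlparse(url.rstrip(".,;:!?)")).hostname
        if host:
            hosts.append(host.lower())
    return hosts


def is_known_good(host):
    """True if the host is an allowlisted domain or one of its subdomains."""
    return any(host == d or host.endswith("." + d) for d in KNOWN_GOOD_DOMAINS)


def red_flags(message):
    """List simple warning signs: unrecognized links and pressure language."""
    flags = []
    for host in extract_hosts(message):
        if not is_known_good(host):
            flags.append("unrecognized link: " + host)
    lowered = message.lower()
    for phrase in URGENCY_PHRASES:
        if phrase in lowered:
            flags.append("urgency phrase: " + phrase)
    return flags


if __name__ == "__main__":
    text = "Unpaid toll. Pay now to avoid a late fee: https://tollagency-pay.test/bill"
    for flag in red_flags(text):
        print(flag)
```

A check like this only catches the obvious cases. An empty result does not mean a text is safe, so the advice above still applies: contact the company through a number or website you already trust.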

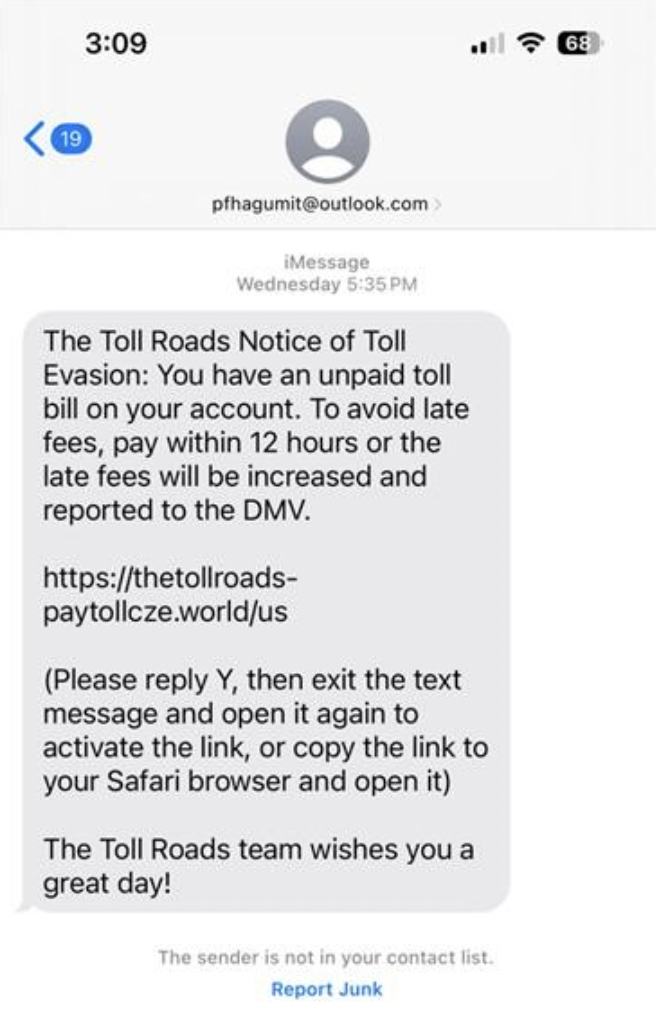

2 – Romancing the Bot: AI Chatbots and Images Finagle Their Way Into Romance Scams

It started with a DM. And a few months later, it cost her $1,200.

Earlier this year, we brought you the story of 25-year-old computer programmer Maggie K., who fell for a romance scam on Instagram. Her story played out like so many others. When she and her online boyfriend finally agreed to meet in person, he claimed he missed his flight and needed money to rebook. Desperate to finally see him, she sent the money and never heard from him again.

But here’s the twist—he wasn’t real in the first place.

When she reported the scam to police, they determined his images were all made with AI. In Maggie’s words, “That was the scariest part—I had trusted someone who never even existed.”

Maggie isn’t alone. Our own research earlier this year revealed that more than half (52%) of people have been scammed out of money or pressured to send money or gifts by someone they met online.

Moreover, we found that scammers have fueled those figures with the use of AI. Of people we surveyed, more than 1 in 4 (26%) said they—or someone they know—have been approached by an AI chatbot posing as a real person on a dating app or social media.

We expect this trend will only continue, as AI tools make it easier and more efficient to pull off romance scams on an increasingly large scale.

Even so, the guidelines for avoiding romance scams remain the same:

- Never send money to someone you’ve never met in person.

- Be wary when things move too fast, too soon, like when the other person starts talking about love almost right away.

- Be skeptical if they say they can’t meet in person because they live abroad. It’s often part of a scammer’s story that they’re there for charity or military service.

- Look out for stories of urgent financial need, such as sudden emergencies or requests for help with travel expenses to meet you.

- Also watch out for people who ask for payment in gift cards, crypto, wire transfers, or other forms of payment that are tough to recover. That’s a sign of a scam.

3 – Paying to Get Paid: The New Job Scam That Raked in Millions

The job offer sounds simple enough … go online, review products, like videos, or do otherwise simple tasks and get paid doing it—until it’s time to get paid.

It’s a new breed of job scam that took root this spring, one where victims found themselves “paying to get paid.”

The FTC dubbed these scams “gamified job scams” or “task scams.” Given the way these scams work, the naming fits.

It starts with a text or direct message from a “recruiter” offering work with the promise of making good money by “liking” or “rating” sets of videos or product images in an app, all with the vague purpose of “product optimization.” With each click, you earn a “commission” and see your “earnings” rack up in the app. You might even get a payout, somewhere between $5 and $20, just to earn your trust.

Then comes the hook.

As in a video game, the scammer sweetens the deal by saying the next batch of work can “level up” your earnings. But if you want to claim your “earnings” and book more work, you need to pay up. So you make the deposit, complete the task set, and when you try to get your pay, the scammer and your money are gone. It was all fake.

This scam and others like it fall right in line with McAfee data that uncovered a spike in job-related scams of 1,000% between May and July, which undoubtedly built on 2024’s record-setting job scam losses of $501 million.

Whatever form they take, here’s how you can avoid job scams:

Step one—ignore job offers over text and social media

A proper recruiter will reach out to you by email or via a job networking site. Moreover, per the FTC, any job that pays you to “like” or “rate” content is against the law. That alone says it’s a scam.

Step two—look up the company

In the case of job offers in general, look up the company. Check out their background and see if it matches up with the job they’re pitching. In the U.S., the Better Business Bureau (BBB) offers a list of businesses you can search.

Step three—never pay to start a job

If you’re asked to pay up front, with any form of payment, refuse, whether that’s for “training,” “equipment,” or more work. It’s a sign of a scam.

4 – Seeing is Believing is Out the Window: The Al Roker Deepfake Scam

Prince Harry, Taylor Swift, and now the Today show’s Al Roker, too: they’ve all found themselves as the AI-generated spokespeople for deepfake scams.

In the past, a deepfake Prince Harry pushed bogus investments, while another deepfake of Taylor Swift hawked a phony cookware deal. Then, this spring, a deepfake of Al Roker used his image and voice to promote a bogus hypertension cure—claiming, falsely, that he had suffered “a couple of heart attacks.”

The fabricated clip appeared on Facebook and was convincing enough to fool plenty of people, including some of Roker’s own friends. “I’ve had some celebrity friends call because their parents got taken in by it,” said Roker.

While Meta quickly removed the video from Facebook after being contacted by TODAY, the damage was done. The incident highlights a growing concern in the digital age: how easy it is to create—and believe—convincing deepfakes.

Roker put it plainly, “We used to say, ‘Seeing is believing.’ Well, that’s kind of out the window now.”

In all, this stands as a good reminder to be skeptical of celebrity endorsements on social media. If a public figure fronts an apparent deal for an investment, cookware, or a hypertension “cure” in your feed, think twice. And better yet, let our Scam Detector help you spot what’s real and what’s fake out there.

5 – September 2025: The First Agentic AI Attack Spotted in the Wild

And to close things out, a look at some recent news, which also serves as a look ahead.

In September, researchers spotted something unseen before: a cyberattack almost entirely run by agentic AI.

What is Agentic AI?

Definition: Artificial intelligence systems that can independently plan, make decisions, and work toward specific goals with minimal human intervention; in this way, they execute complex tasks by adapting to new info and situations on their own.

As reported by AI research company Anthropic, a Chinese state-sponsored group allegedly used the company’s Claude Code agent to automate most of an espionage campaign across nearly thirty organizations. The attackers allegedly used jailbreaking techniques to bypass the guardrails that typically prevent such malicious use, breaking their attacks down into small, seemingly innocent tasks. That way, Claude orchestrated a large-scale attack it wouldn’t otherwise execute.

Once operational, the agent performed reconnaissance, wrote exploit code, harvested credentials, identified high-value databases, created backdoors, and generated documentation of the intrusion. By Anthropic’s estimate, the agent completed 80–90% of the work without any human involvement.

According to Anthropic: “At the peak of its attack, the AI made thousands of requests, often multiple per second—an attack speed that would have been, for human hackers, simply impossible to match.”

We knew this moment was coming, and now the time has arrived: a coordinated attack that once took weeks of human effort to execute now boils down to minutes as agentic AI does the work on someone’s behalf.

In 2026, we can expect to see more attacks led by agentic AI, along with AI-led scams as well, which raises an important question that Anthropic answers head-on:

“If AI models can be misused for cyberattacks at this scale, why continue to develop and release them? The answer is that the very abilities that allow Claude to be used in these attacks also make it crucial for cyber defense. When sophisticated cyberattacks inevitably occur, our goal is for Claude—into which we’ve built strong safeguards—to assist cybersecurity professionals to detect, disrupt, and prepare for future versions of the attack.”

That gets to the heart of security online: it’s an ever-evolving game. As new technologies arise, those who protect and those who harm one-up each other in a cycle of innovation and exploits. As we’re on the side of innovation here, you can be sure we’ll continue to roll out protections that keep you safer out there. Even as AI changes the game, our commitment remains the same.

Happy Holidays!

We’re taking a little holiday break here and we’ll be back with our weekly roundups again in 2026. Looking forward to catching up with you then and helping you stay safer in the new year.

The post This Year in Scams: A 2025 Retrospective, and a Look Ahead at 2026 appeared first on McAfee Blog.