We're Going Goo Goo Gaga for This Baby Bottle Sterilizer for Just $260 Right Now

Nate Wolf / Barron's Online:

Shares of Zoom jump 10%+ after analysts at Baird estimated that Zoom's 2023 investment in Anthropic could be worth $2B to $4B, depending on dilution — Key Points — Zoom Communications has been in need of a boost ever since shares of the video-calling company soared—then tanked—during the Covid-19 pandemic.

Mary Ann Azevedo / Crunchbase News:

Zocks, which offers an AI assistant for financial advisers, raised a $45M Series B co-led by Lightspeed and QED Investors, taking its total funding to $65M — Zocks, which has built an AI assistant for financial advisers, has raised $45 million in a Series B funding co-led by Lightspeed Venture Partners …

Robert Hart / The Verge:

Anthropic rolls out a new extension to MCP to let users interact with apps directly inside the Claude chatbot, with support for Asana, Figma, Slack, and others — Anthropic's one step closer to having an everything app. … Anthropic's Claude got a bit livelier today thanks …

Emma Roth / The Verge:

UpScrolled, a platform for sharing photos, videos, and text that claims political impartiality, says user growth has surged since TikTok's US takeover — UpScrolled is a platform for sharing photos, videos, and text, and it aims to remain ‘impartial’ to political agendas.

In the three years since ChatGPT’s explosive debut, OpenAI’s technology has upended a remarkable range of everyday activities at home, at work, in schools—anywhere people have a browser open or a phone out, which is everywhere.

Now OpenAI is making an explicit play for scientists. In October, the firm announced that it had launched a whole new team, called OpenAI for Science, dedicated to exploring how its large language models could help scientists and tweaking its tools to support them.

The last couple of months have seen a slew of social media posts and academic publications in which mathematicians, physicists, biologists, and others have described how LLMs (and OpenAI’s GPT-5 in particular) have helped them make a discovery or nudged them toward a solution they might otherwise have missed. In part, OpenAI for Science was set up to engage with this community.

And yet OpenAI is also late to the party. Google DeepMind, the rival firm behind groundbreaking scientific models such as AlphaFold and AlphaEvolve, has had an AI-for-science team for years. (When I spoke to Google DeepMind’s CEO and cofounder Demis Hassabis in 2023 about that team, he told me: “This is the reason I started DeepMind … In fact, it’s why I’ve worked my whole career in AI.”)

So why now? How does a push into science fit with OpenAI’s wider mission? And what exactly is the firm hoping to achieve?

I put these questions to Kevin Weil, a vice president at OpenAI who leads the new OpenAI for Science team, in an exclusive interview last week.

Weil is a product guy. He joined OpenAI a couple of years ago as chief product officer after being head of product at Twitter and Instagram. But he started out as a scientist. He got two-thirds of the way through a PhD in particle physics at Stanford University before ditching academia for the Silicon Valley dream. Weil is keen to highlight his pedigree: “I thought I was going to be a physics professor for the rest of my life,” he says. “I still read math books on vacation.”

Asked how OpenAI for Science fits with the firm’s existing lineup of white-collar productivity tools or the viral video app Sora, Weil recites the company mantra: “The mission of OpenAI is to try and build artificial general intelligence and, you know, make it beneficial for all of humanity.”

The impact on science of future versions of this technology could be amazing, he says: New medicines, new materials, new devices. “Think about it helping us understand the nature of reality, helping us think through open problems. Maybe the biggest, most positive impact we’re going to see from AGI will actually be from its ability to accelerate science.”

He adds, “With GPT-5, we saw that becoming possible.”

As Weil tells it, LLMs are now good enough to be useful scientific collaborators, spitballing ideas, suggesting novel directions to explore, and finding fruitful parallels between a scientist’s question and obscure research papers published decades ago or in foreign languages.

That wasn’t the case a year or so ago. Since it announced its first reasoning model, o1, in December 2024, OpenAI has been pushing the envelope of what the technology can do. “You go back a few years and we were all collectively mind-blown that the models could get an 800 on the SAT,” says Weil.

But soon LLMs were acing math competitions and solving graduate-level physics problems. Last year, OpenAI and Google DeepMind both announced that their LLMs had achieved gold-medal-level performance in the International Math Olympiad, one of the toughest math contests in the world. “These models are no longer just better than 90% of grad students,” says Weil. “They’re really at the frontier of human abilities.”

That’s a huge claim, and it comes with caveats. Still, there’s no doubt that GPT-5 is a big improvement on GPT-4 when it comes to complicated problem-solving. GPT-5 includes a so-called reasoning model, a type of LLM that can break down problems into multiple steps and work through them one by one. This technique has made LLMs far better at solving math and logic problems than they used to be.

Measured against an industry benchmark known as GPQA, which includes more than 400 multiple-choice questions that test PhD-level knowledge in biology, physics, and chemistry, GPT-4 scores 39%, well below the human-expert baseline of around 70%. According to OpenAI, GPT-5.2 (the latest update to the model, released in December) scores 92%.

The excitement is evident—and perhaps excessive. In October, senior figures at OpenAI, including Weil, boasted on X that GPT-5 had found solutions to several unsolved math problems. Mathematicians were quick to point out that in fact what GPT-5 appeared to have done was dig up existing solutions in old research papers, including at least one written in German. That was still useful, but it wasn’t the achievement OpenAI seemed to have claimed. Weil and his colleagues deleted their posts.

Now Weil is more careful. It is often enough to find answers that exist but have been forgotten, he says: “We collectively stand on the shoulders of giants, and if LLMs can kind of accumulate that knowledge so that we don’t spend time struggling on a problem that is already solved, that’s an acceleration all of its own.”

He plays down the idea that LLMs are about to come up with a game-changing new discovery. “I don’t think models are there yet,” he says. “Maybe they’ll get there. I’m optimistic that they will.”

But, he insists, that’s not the mission: “Our mission is to accelerate science. And I don’t think the bar for the acceleration of science is, like, Einstein-level reimagining of an entire field.”

For Weil, the question is this: “Does science actually happen faster because scientists plus models can do much more, and do it more quickly, than scientists alone? I think we’re already seeing that.”

In November, OpenAI published a series of anecdotal case studies contributed by scientists, both inside and outside the company, that illustrated how they had used GPT-5 and how it had helped. “Most of the cases were scientists that were already using GPT-5 directly in their research and had come to us one way or another saying, ‘Look at what I’m able to do with these tools,’” says Weil.

The key things that GPT-5 seems to be good at are finding references and connections to existing work that scientists were not aware of, which sometimes sparks new ideas; helping scientists sketch mathematical proofs; and suggesting ways for scientists to test hypotheses in the lab.

“GPT-5.2 has read substantially every paper written in the last 30 years,” says Weil. “And it understands not just the field that a particular scientist is working in; it can bring together analogies from other, unrelated fields.”

“That’s incredibly powerful,” he continues. “You can always find a human collaborator in an adjacent field, but it’s difficult to find, you know, a thousand collaborators in all thousand adjacent fields that might matter. And in addition to that, I can work with the model late at night—it doesn’t sleep—and I can ask it 10 things in parallel, which is kind of awkward to do to a human.”

Most of the scientists OpenAI reached out to back up Weil’s position.

Robert Scherrer, a professor of physics and astronomy at Vanderbilt University, only played around with ChatGPT for fun (“I used it to rewrite the theme song for Gilligan’s Island in the style of Beowulf, which it did very well,” he tells me) until his Vanderbilt colleague Alex Lupsasca, a fellow physicist who now works at OpenAI, told him that GPT-5 had helped solve a problem he’d been working on.

Lupsasca gave Scherrer access to GPT-5 Pro, OpenAI’s $200-a-month premium subscription. “It managed to solve a problem that I and my graduate student could not solve despite working on it for several months,” says Scherrer.

It’s not perfect, he says: “GPT-5 still makes dumb mistakes. Of course, I do too, but the mistakes GPT-5 makes are even dumber.” And yet it keeps getting better, he says: “If current trends continue—and that’s a big if—I suspect that all scientists will be using LLMs soon.”

Derya Unutmaz, a professor of biology at the Jackson Laboratory, a nonprofit research institute, uses GPT-5 to brainstorm ideas, summarize papers, and plan experiments in his work studying the immune system. In the case study he shared with OpenAI, Unutmaz used GPT-5 to analyze an old data set that his team had previously looked at. The model came up with fresh insights and interpretations.

“LLMs are already essential for scientists,” he says. “When you can complete analysis of data sets that used to take months, not using them is not an option anymore.”

Nikita Zhivotovskiy, a statistician at the University of California, Berkeley, says he has been using LLMs in his research since the first version of ChatGPT came out.

Like Scherrer, he finds LLMs most useful when they highlight unexpected connections between his own work and existing results he did not know about. “I believe that LLMs are becoming an essential technical tool for scientists, much like computers and the internet did before,” he says. “I expect a long-term disadvantage for those who do not use them.”

But he does not expect LLMs to make novel discoveries anytime soon. “I have seen very few genuinely fresh ideas or arguments that would be worth a publication on their own,” he says. “So far, they seem to mainly combine existing results, sometimes incorrectly, rather than produce genuinely new approaches.”

I also contacted a handful of scientists who are not connected to OpenAI.

Andy Cooper, a professor of chemistry at the University of Liverpool and director of the Leverhulme Research Centre for Functional Materials Design, is less enthusiastic. “We have not found, yet, that LLMs are fundamentally changing the way that science is done,” he says. “But our recent results suggest that they do have a place.”

Cooper is leading a project to develop a so-called AI scientist that can fully automate parts of the scientific workflow. He says that his team doesn’t use LLMs to come up with ideas. But the tech is starting to prove useful as part of a wider automated system where an LLM can help direct robots, for example.

“My guess is that LLMs might stick more in robotic workflows, at least initially, because I’m not sure that people are ready to be told what to do by an LLM,” says Cooper. “I’m certainly not.”

LLMs may be becoming more and more useful, but caution is still advised. In December, Jonathan Oppenheim, a scientist who works on quantum mechanics, called out a mistake that made its way into a scientific journal. “OpenAI leadership are promoting a paper in Physics Letters B where GPT-5 proposed the main idea—possibly the first peer-reviewed paper where an LLM generated the core contribution,” Oppenheim posted on X. “One small problem: GPT-5’s idea tests the wrong thing.”

He continued: “GPT-5 was asked for a test that detects nonlinear theories. It provided a test that detects nonlocal ones. Related-sounding, but different. It’s like asking for a COVID test, and the LLM cheerfully hands you a test for chickenpox.”

It is clear that a lot of scientists are finding innovative and intuitive ways to engage with LLMs. It is also clear that the technology makes mistakes that can be so subtle even experts miss them.

Part of the problem is the way ChatGPT can flatter you into letting down your guard. As Oppenheim put it: “A core issue is that LLMs are being trained to validate the user, while science needs tools that challenge us.” In an extreme case, one individual (who was not a scientist) was persuaded by ChatGPT into thinking for months that he’d invented a new branch of mathematics.

Of course, Weil is well aware of the problem of hallucination. But he insists that newer models are hallucinating less and less. Even so, focusing on hallucination might be missing the point, he says.

“One of my teammates here, an ex math professor, said something that stuck with me,” says Weil. “He said: ‘When I’m doing research, if I’m bouncing ideas off a colleague, I’m wrong 90% of the time and that’s kind of the point. We’re both spitballing ideas and trying to find something that works.’”

“That’s actually a desirable place to be,” says Weil. “If you say enough wrong things and then somebody stumbles on a grain of truth and then the other person seizes on it and says, ‘Oh, yeah, that’s not quite right, but what if we—’ You gradually kind of find your trail through the woods.”

This is Weil’s core vision for OpenAI for Science. GPT-5 is good, but it is not an oracle. The value of this technology is in pointing people in new directions, not coming up with definitive answers, he says.

In fact, one of the things OpenAI is now looking at is making GPT-5 dial down its confidence when it delivers a response. Instead of saying Here’s the answer, it might tell scientists: Here’s something to consider.

“That’s actually something that we are spending a bunch of time on,” says Weil. “Trying to make sure that the model has some sort of epistemological humility.”

Another thing OpenAI is looking at is how to use GPT-5 to fact-check GPT-5. It’s often the case that if you feed one of GPT-5’s answers back into the model, it will pick it apart and highlight mistakes.

“You can kind of hook the model up as its own critic,” says Weil. “Then you can get a workflow where the model is thinking and then it goes to another model, and if that model finds things that it could improve, then it passes it back to the original model and says, ‘Hey, wait a minute—this part wasn’t right, but this part was interesting. Keep it.’ It’s almost like a couple of agents working together and you only see the output once it passes the critic.”
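The generate-and-critique workflow Weil describes can be sketched in a few lines. This is only an illustration of the general pattern, not OpenAI's implementation: the names `refine`, `toy_generate`, and `toy_critique` are hypothetical, and the two toy functions are stand-ins for what would be model calls in a real system.

```python
from typing import Callable, Optional, Tuple

# Hypothetical interfaces (assumptions, not a real API):
# a generator takes (question, feedback) and returns a draft answer;
# a critic takes (question, draft) and returns feedback, or None to accept.
Generator = Callable[[str, Optional[str]], str]
Critic = Callable[[str, str], Optional[str]]


def refine(question: str, generate: Generator, critique: Critic,
           max_rounds: int = 3) -> Tuple[Optional[str], int]:
    """Loop a draft between generator and critic until the critic accepts.

    Returns (accepted_draft, rounds_used), or (None, max_rounds) if no
    draft passed the critic, so the caller never sees a rejected draft.
    """
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        draft = generate(question, feedback)
        feedback = critique(question, draft)
        if feedback is None:  # the critic found nothing to improve
            return draft, round_no
    return None, max_rounds


# Toy stand-ins for the two models, for illustration only.
def toy_generate(question: str, feedback: Optional[str]) -> str:
    # The first attempt is wrong; the attempt made after feedback is right.
    return "2 + 2 = 4" if feedback else "2 + 2 = 5"


def toy_critique(question: str, draft: str) -> Optional[str]:
    if draft.endswith("= 4"):
        return None
    return "The sum is wrong, but the setup is fine. Keep it."


answer, rounds = refine("What is 2 + 2?", toy_generate, toy_critique)
print(answer, rounds)  # 2 + 2 = 4 2
```

Capping the number of rounds is a design choice in this sketch: if the critic never accepts a draft, the loop returns nothing rather than surfacing an answer that failed review.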

What Weil is describing also sounds a lot like what Google DeepMind did with AlphaEvolve, a tool that wrapped the LLM Gemini inside a wider system that filtered out the good responses from the bad and fed them back in again to be improved on. Google DeepMind has used AlphaEvolve to solve several real-world problems.

OpenAI faces stiff competition from rival firms, whose own LLMs can do most, if not all, of the things it claims for its own models. If that’s the case, why should scientists use GPT-5 instead of Gemini or Anthropic’s Claude, families of models that are themselves improving every year? Ultimately, OpenAI for Science may be as much an effort to plant a flag in new territory as anything else. The real innovations are still to come.

“I think 2026 will be for science what 2025 was for software engineering,” says Weil. “At the beginning of 2025, if you were using AI to write most of your code, you were an early adopter. Whereas 12 months later, if you’re not using AI to write most of your code, you’re probably falling behind. We’re now seeing those same early flashes for science as we did for code.”

He continues: “I think that in a year, if you’re a scientist and you’re not heavily using AI, you’ll be missing an opportunity to increase the quality and pace of your thinking.”

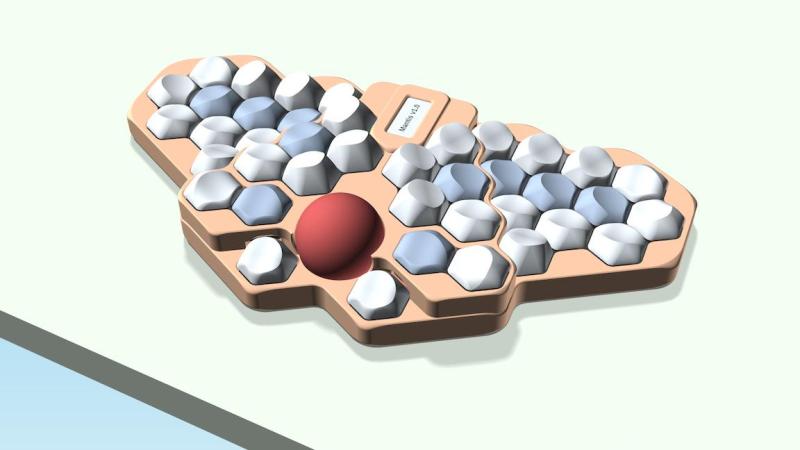

I love, love, love Saturn by [Rain2], which comes in two versions. The first, which is notably more complex, is shown here with its rings-of-Saturn thumb clusters.

Saturn has one built right in. The basic idea was to add a num pad while keeping the total number of keys to a minimum. Thanks to a mod key, this area can be many things, including but not limited to a num pad.

As far as the far-out shape goes, and I love that the curvature covers the thumb cluster and the index finger, [Rain2] wanted to get away from the traditional thumb cluster design. Be sure to check out the back of the boards in the image gallery.

Unfortunately, this version is too complicated to make, so v2 does not have the cool collision shapes going for it. But it is still an excellent keyboard, and perhaps will be open source someday.

Say hello to Phanny, a custom 52-key wireless split from [SfBattleBeagle]. This interestingly-named board has a custom splay that they designed from the ground up along with PCBWay, who sponsored the PCBs in the first place.

While Ergogen is all the rage, [SfBattleBeagle] still opts to use Fusion and KiCad, preferring the UI of the average CAD program. If you’re wondering about the lack of palm rests, the main reason is that [SfBattleBeagle] tends to bounce between screens, as well as moving between the split and the num pad. To that end, they are currently designing a pair of sliding wrist skates that I would love to hear more about.

Be sure to check out the GitHub repo for all the details and a nice build guide. [SfBattleBeagle] says this is a fun project and results in a very comfy board.

Via reddit

Do you rock a sweet set of peripherals on a screamin’ desk pad? Send me a picture along with your handle and all the gory details, and you could be featured here!

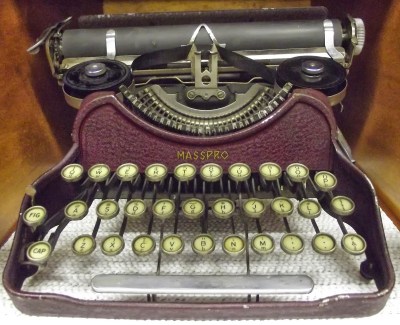

I must say, the Antikey Chop doesn’t have much to say about the Masspro typewriter, and for good reason.

But here’s what we know: the Masspro was invented by a George Francis Rose, who was the son of Frank S. Rose, inventor of the Standard Folding Typewriter. That machine was the predecessor to the Corona No. 3.

Frank died right as the Rose Typewriter Co. was starting to get somewhere. George took over, but then it needed financing pretty badly.

Angel investor and congressman Bill Conger took over the company, relocated, and renamed it the Standard Folding Typewriter Co. According to the Antikey Chop, “selling his father’s company was arguably George’s greatest contribution to typewriter history”.

George Rose was an engineer like his father, but he was not very original when it came to typewriters. The Masspro is familiar yet foreign, and resembles the Corona Four. Although the patent was issued in 1925, production didn’t begin until 1932, and likely ended within two years.

Why? It was the wrong machine at the wrong time. Plus, it was poorly built, and bore a double-shift keyboard which was outdated by this time. And, oh yeah, the company was started during the Depression.

But I like the Masspro. I think my favorite part, aside from the open keyboard, is the logo, which looks either like hieroglyphics or letters chiseled into a stone tablet.

I also like the textured firewall area where the logo is stamped. The Antikey Chop calls this a crinkle finish. Apparently, they came in black, blue, green, and red. The red isn’t candy apple, it’s more of an ox-blood red, and that’s just fine with me. I’d love to see the blue and green, though. Oh, here’s the green.

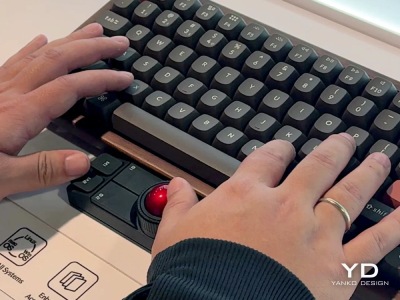

Okay, so Keychron’s new Nape Pro mouse is pretty darn cool, and this is the best picture I could find that actually shows how you’re supposed to implement this thing on your desk. Otherwise, it looks like some kind of presentation remote.

So the idea here is to never take your hands off the keyboard to mouse, although you can use it off to the side like a regular trackball if you want. I say the ability to leave your fingers on the home row is even better.

There are plenty of keyboards with trackpads and other mousing functions that let you do this. But maybe you’re not ready to go that far. This mouse is a nice, easy first step.

The ball is pretty small at 25 mm. For comparison, the M575 uses a 34 mm ball, which is pretty common for trackball mice. Under those six buttons are quiet Huano micro switches, which makes sense, but I personally think loud-ish mice are nice enough.

I’ve never given it much thought, but the switches on my Logitech M575 are nice and clicky. I wonder how these compare, but I don’t see a sound sample. If the Nape Pro switches sound anything like this, then wowsers, that is quiet.

The super-cool part here is the software and orientation system, which they call OctaShift. The thing knows how it’s positioned and can remap its functions to match. M1 and M2 are meant to be your primary mouse buttons, and they are reported to be comfortable to reach in any position.

Inside you’ll find a Realtek chip with a 1 kHz polling rate along with a PixArt PAW3222 sensor, which puts this mouse in the realm of decent wireless gaming mice. But the connectivity choice is yours between dongle, Bluetooth, and USB-C cable.

And check this out: the firmware is ZMK, and Keychron plans to release the case STLs. Finally, it seems the mouse world is catching up with the keyboard world a bit.

Got a hot tip that has like, anything to do with keyboards? Help me out by sending in a link or two. Don’t want all the Hackaday scribes to see it? Feel free to email me directly.

Anthropic's Claude can now present the interfaces of other applications within its chat window, thanks to an extension of the Model Context Protocol (MCP).…

More than 400 tech workers have urged their CEOs to "call the White House and demand ICE leave our cities" after masked federal agents shot and killed Alex Pretti over the weekend and the world's richest and most powerful chief executives remained silent.…

The best iPad deals aren’t the flashy ones. They’re the ones that land on the model people actually use every day for years: streaming, reading, email, schoolwork, travel, and casual creativity. The 11-inch iPad with the A16 chip and 128GB of storage is $299.00, saving you $50 off the $349.00 compared value. It’s not a […]

The post A solid, everyday iPad for under $300 is back on the table appeared first on Digital Trends.

Luxury cars used to feel off-limits—flashy badges, confusing options, and prices that climbed fast. In 2026, that idea feels outdated.